Cloud storage - never fails to surprise

Public cloud storage has a lot of crazy stuff in it. You can find all sorts of things, from privacy-relevant documents to complete backups and keys for all kinds of services, including keys for AWS, Google Cloud and Azure.

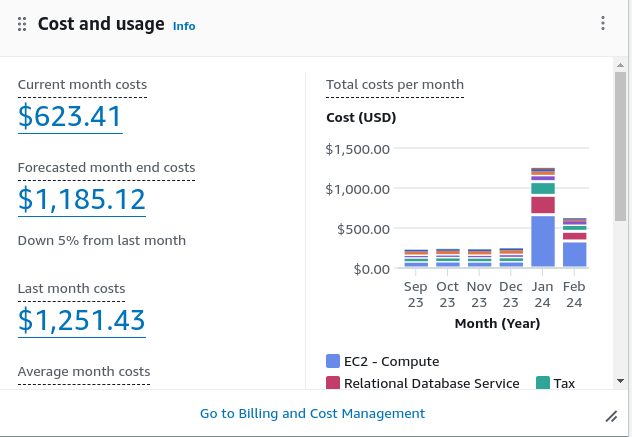

Some of the keys or tokens would have allowed a complete organization takeover! As many companies still have no cost limit configured at their cloud provider, an attacker can cause a lot of damage.

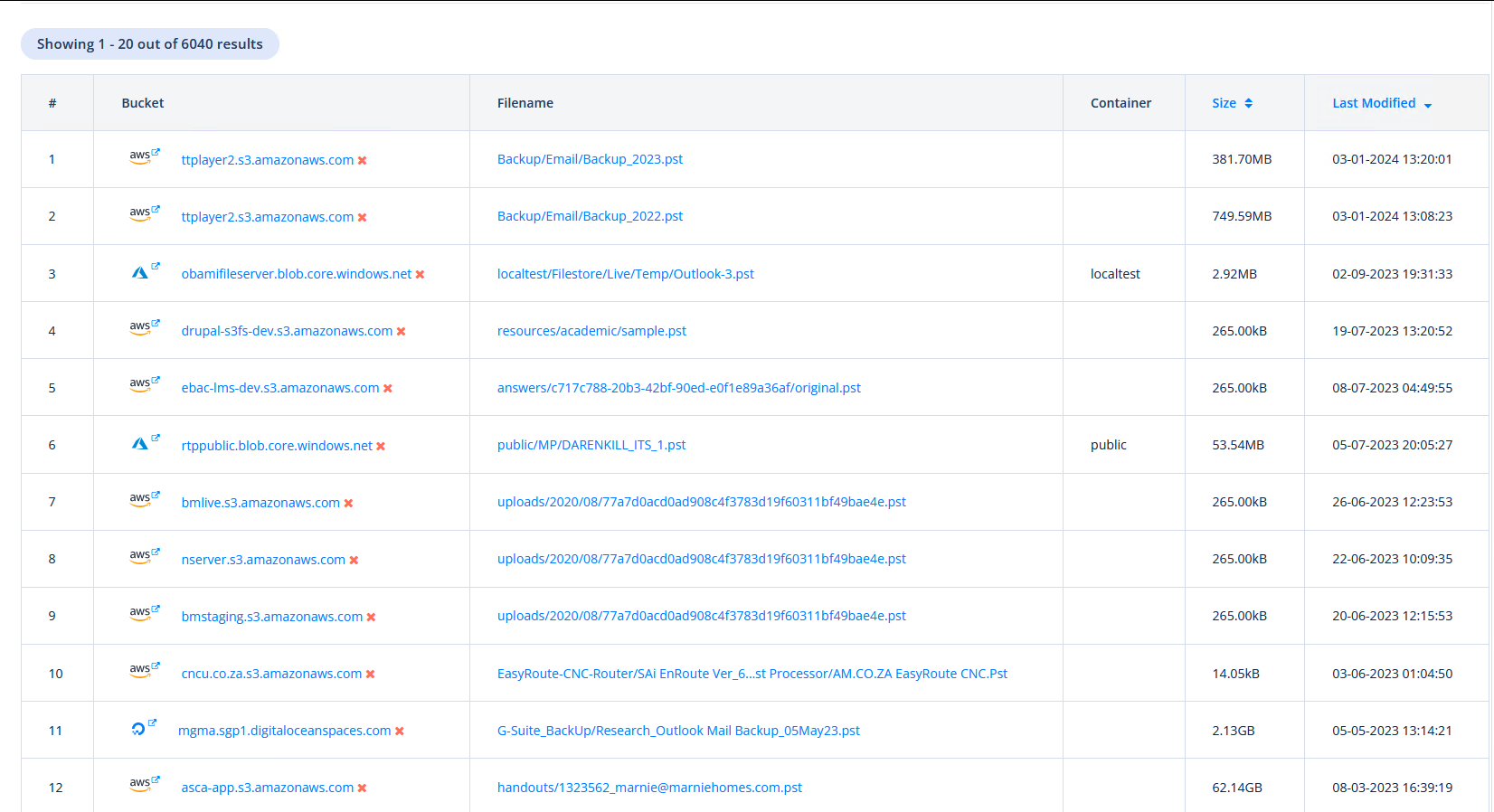

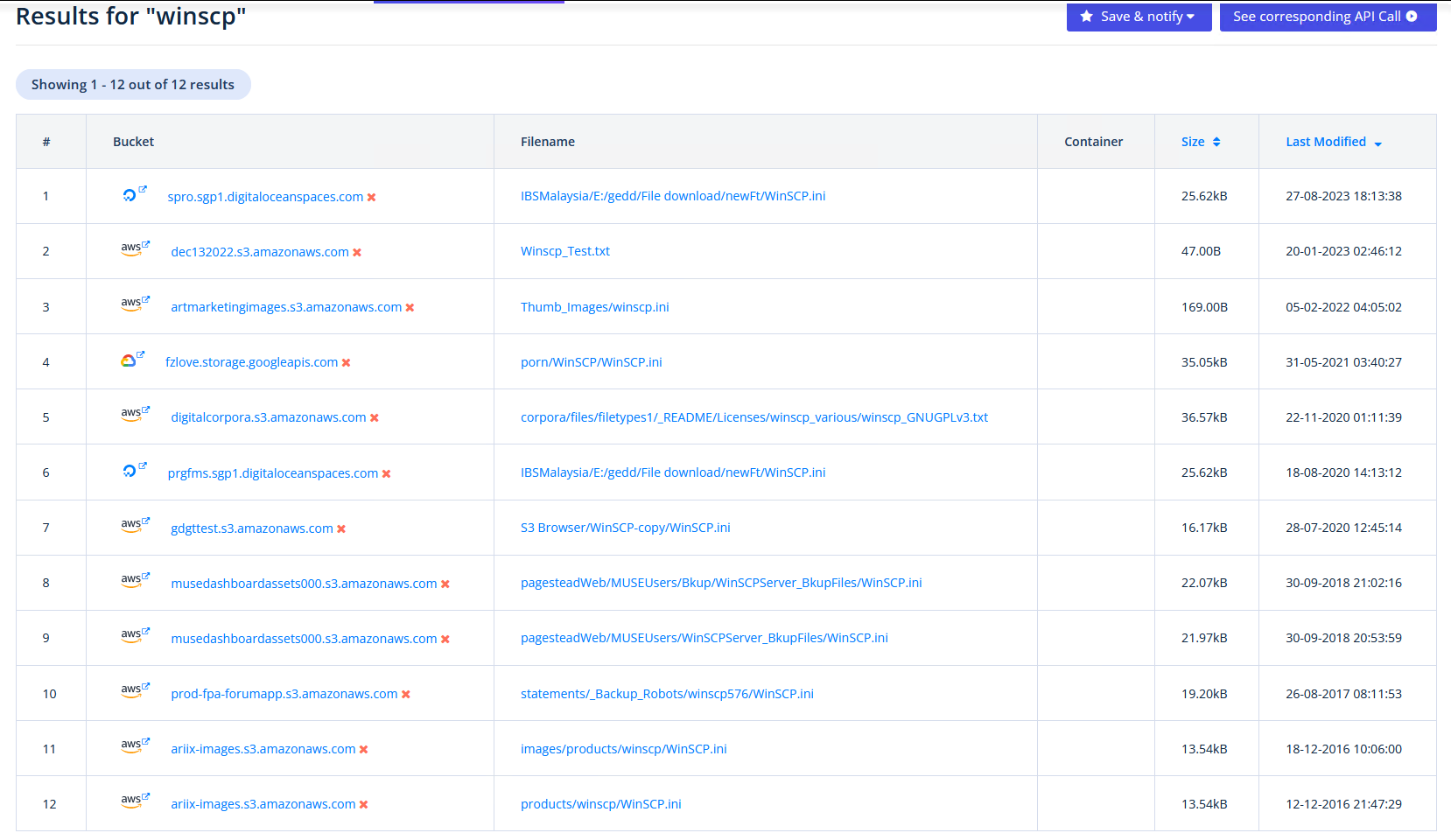

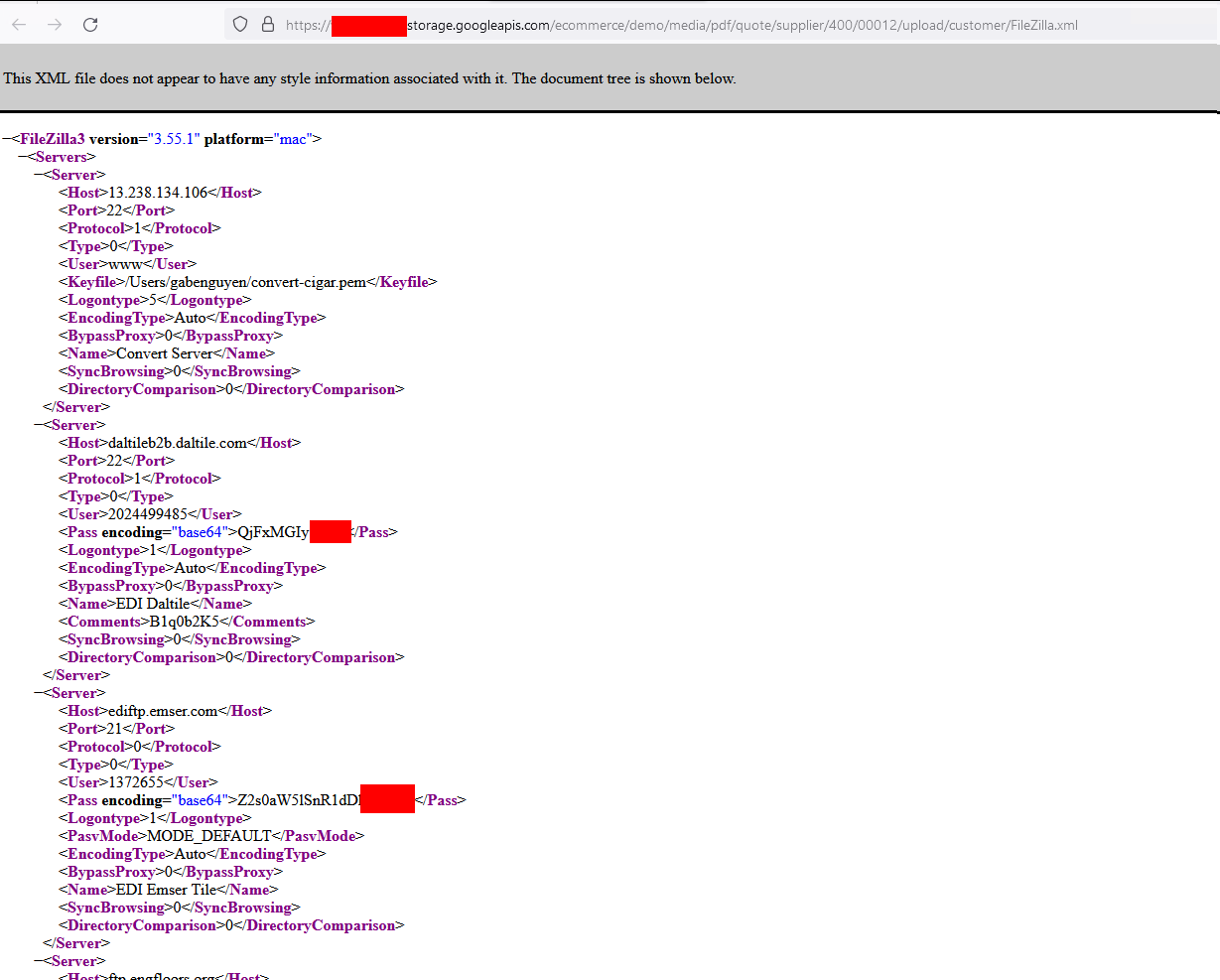

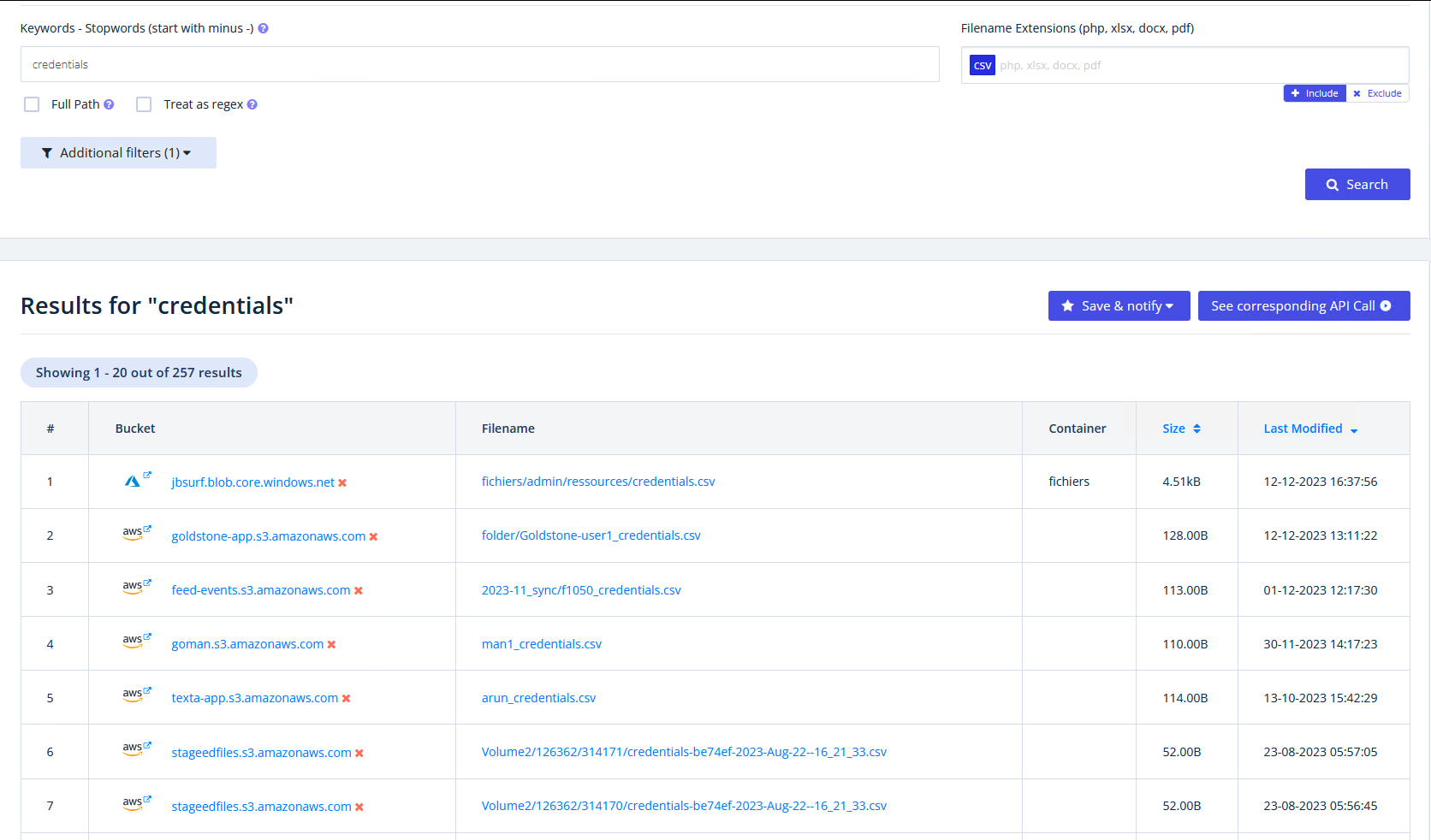

To identify most of this stuff, we almost exclusively use https://grayhatwarfare.com/

Exposed sensitive data everywhere

Motivation

This is not a new issue; it has been around for a long time. I provide most of the search links and redact only the most critical details, as the typical reader here would be able to restore them within seconds anyway. Out of respect for everyone's time, the links are included.

It is very difficult to report this stuff, as in most cases the owner is not clear. And breaching an AWS org to get the billing address might be a little bit out of scope.

I came up with the idea of abusing the GitHub secret scanner to at least invalidate leaked API keys by simply uploading them to GitHub.

According to this blog post: https://xebia.com/blog/what-happens-when-you-leak-aws-credentials-and-how-aws-minimizes-the-damage/ AWS will attach a policy to the leaked user. However, this still does not immediately block access to all resources, so it is not sufficient for reporting leaked keys, as I would just be collecting them for others…

If anybody knows a way to securely report leaked API keys, please let me know.

The following chapters are a sample gallery of what can be found, with a brief explanation of why it shouldn’t be there. Keep in mind that this is only ever a small fraction of what can be found.

It is important to mention that not everything that can be found is still valid; some of it might be honeycreds or otherwise heavily monitored.

Private Stuff

Let’s start with the privacy relevant stuff.

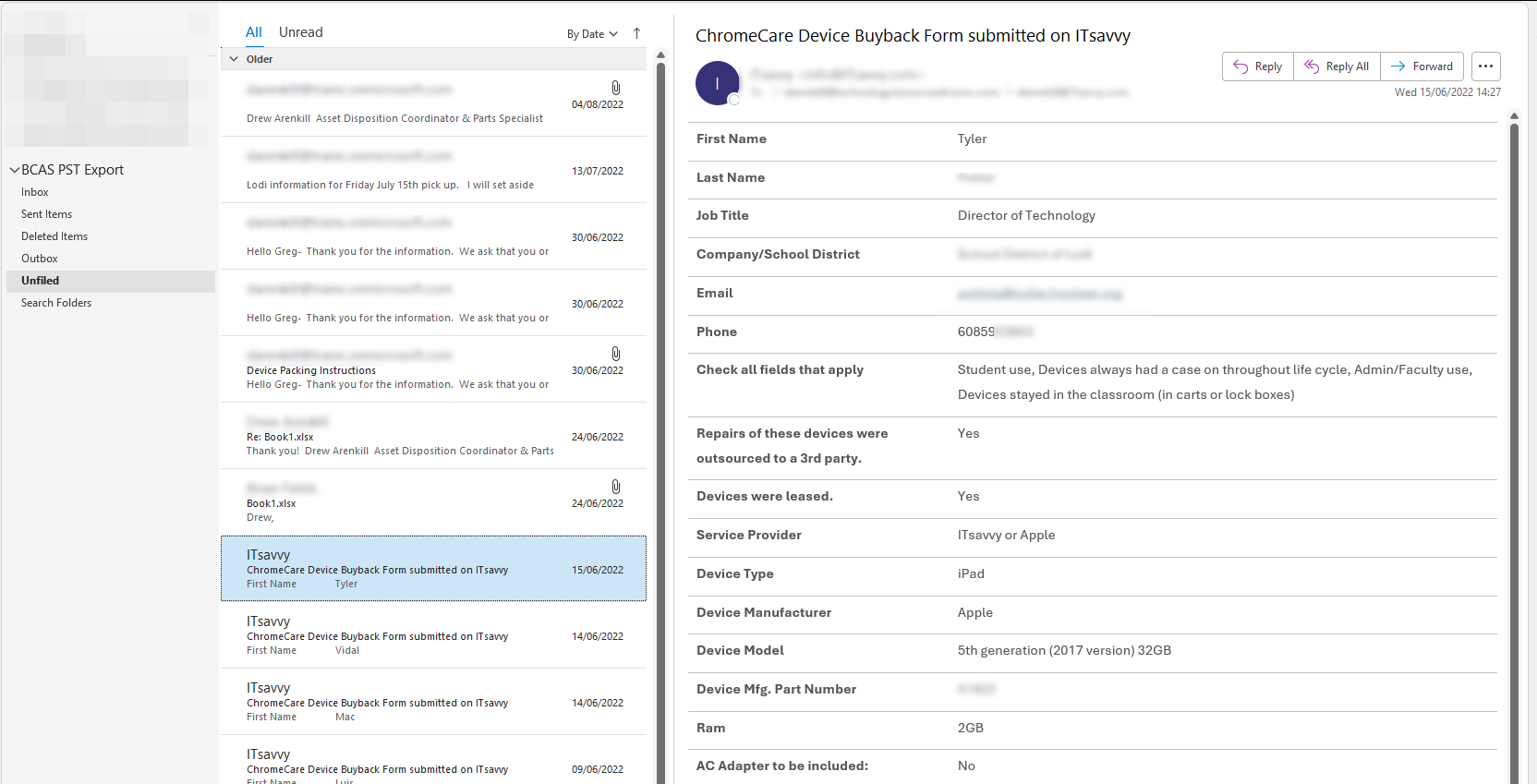

Outlook accounts and emails (pst, eml, msg)

PST files can be imported into Outlook and can contain complete email accounts.

The same goes for msg and eml files, each of which is a single email. Why would you back up a single email? Because it is important …

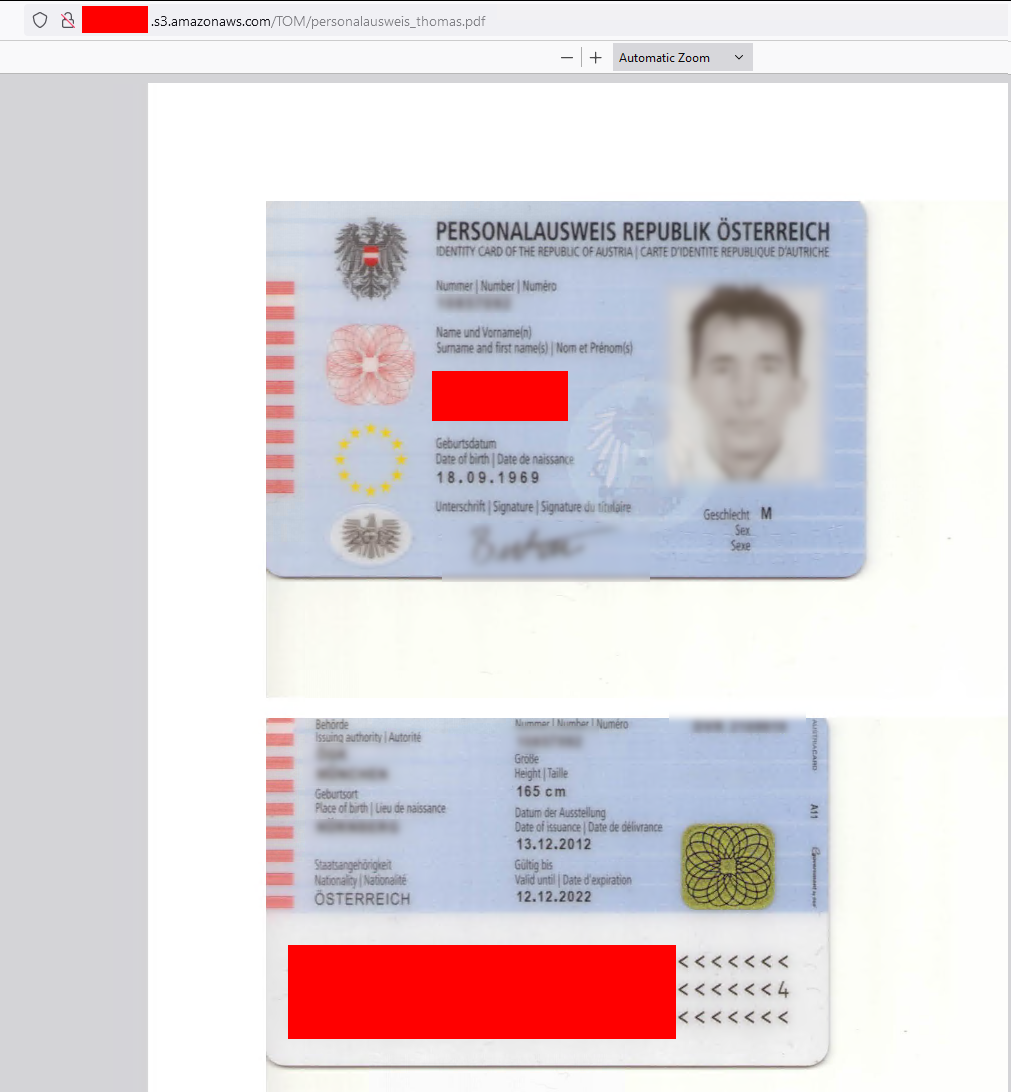

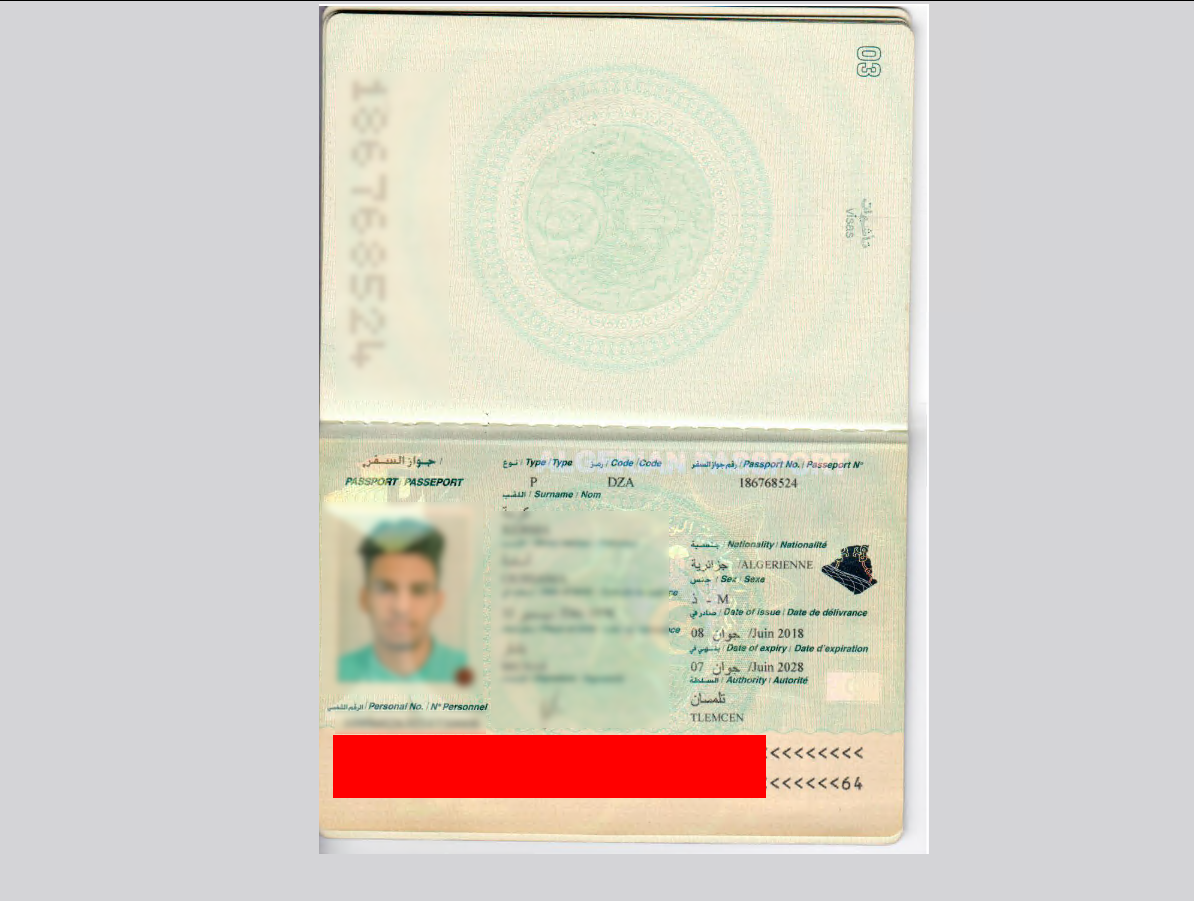

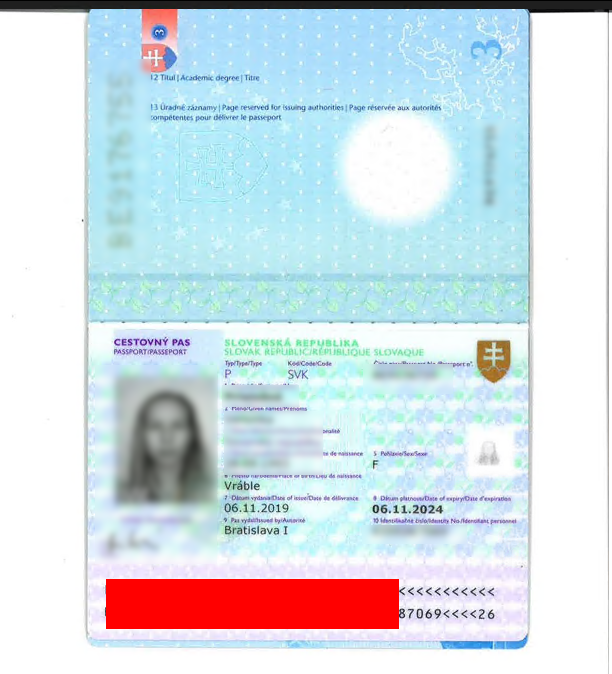

Passports

Personalausweise (German / Austrian ID Cards)

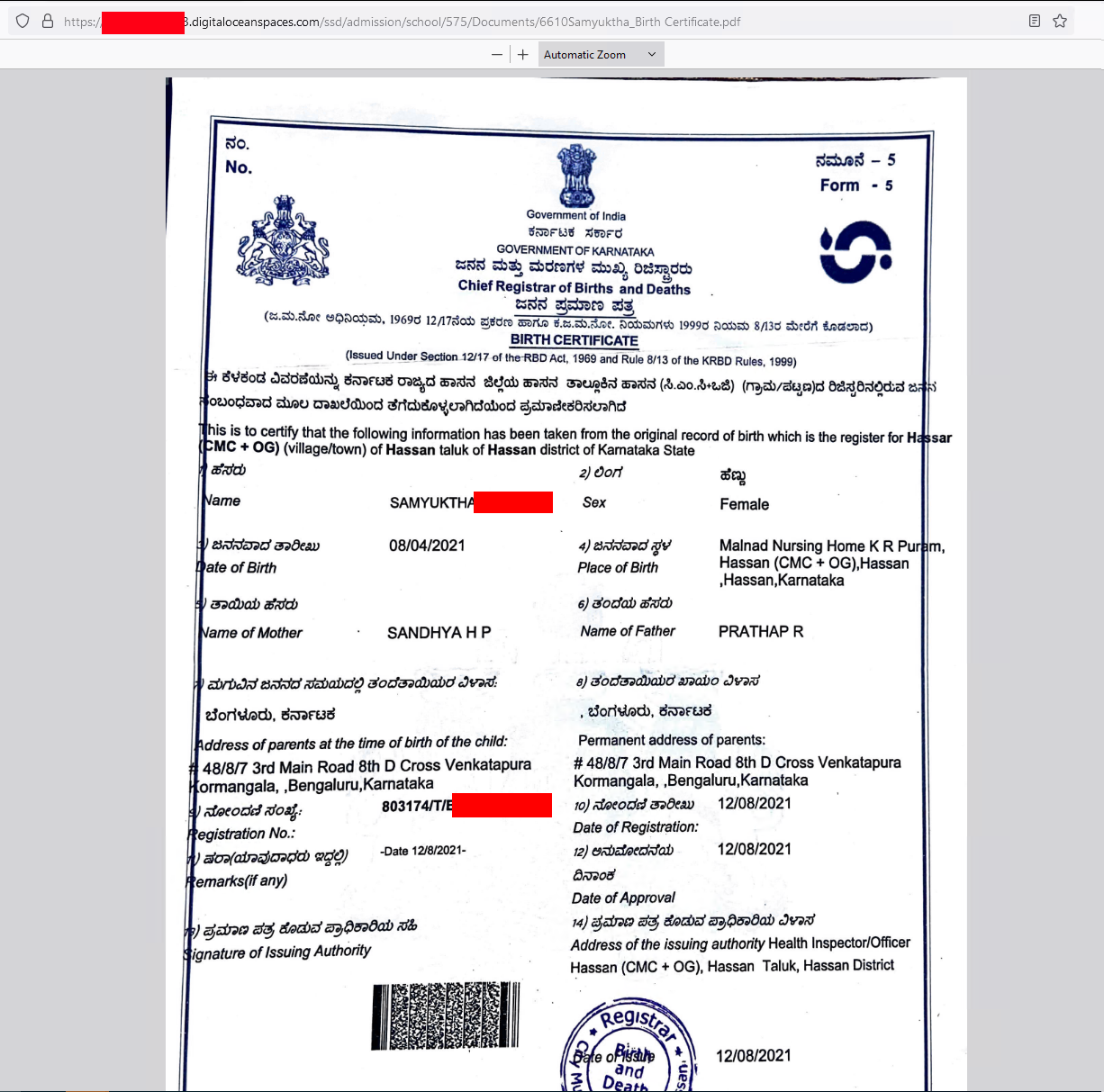

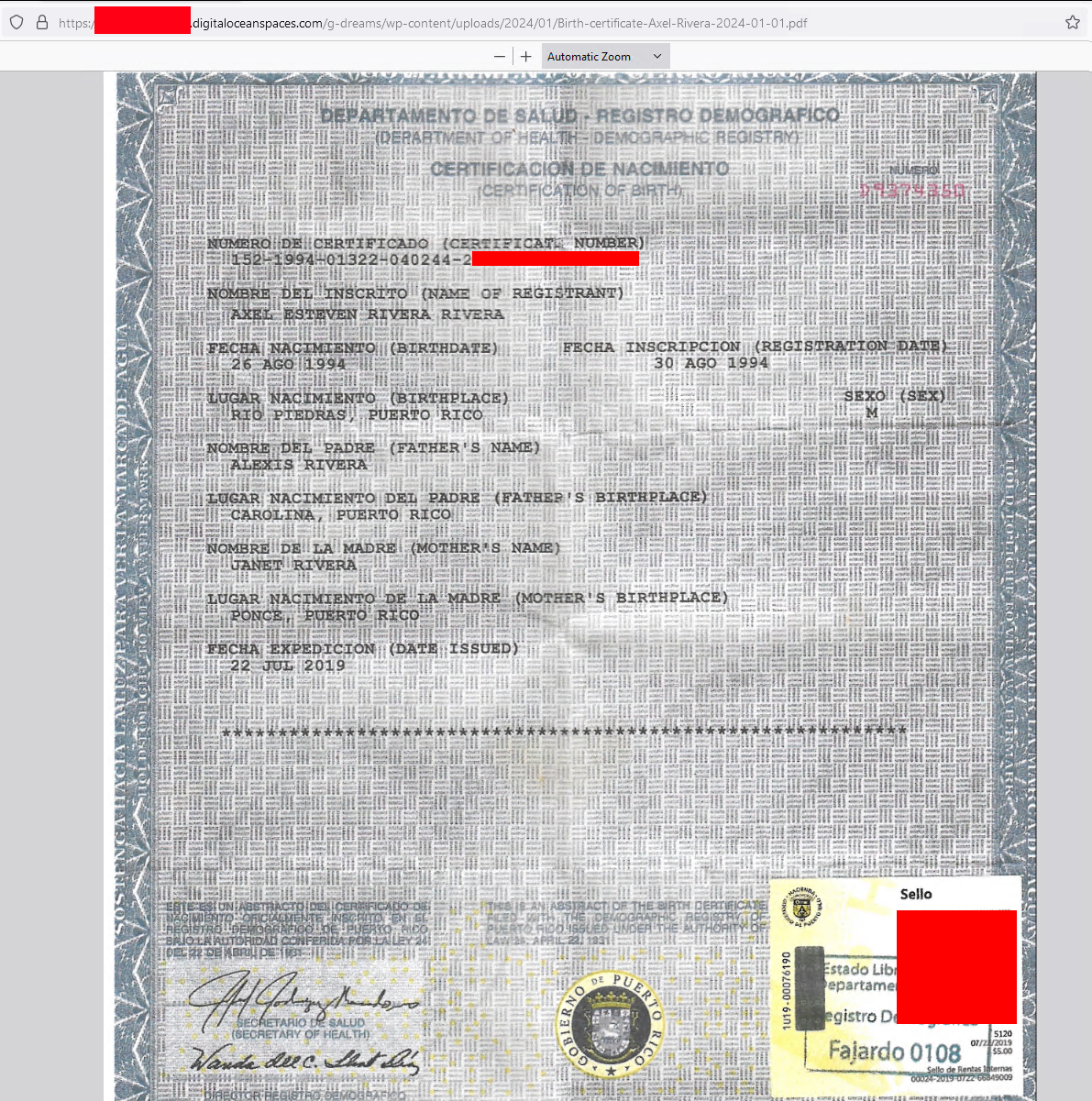

Saving Passports and ID documents is illegal in several countries.

Other

Certificate of Birth

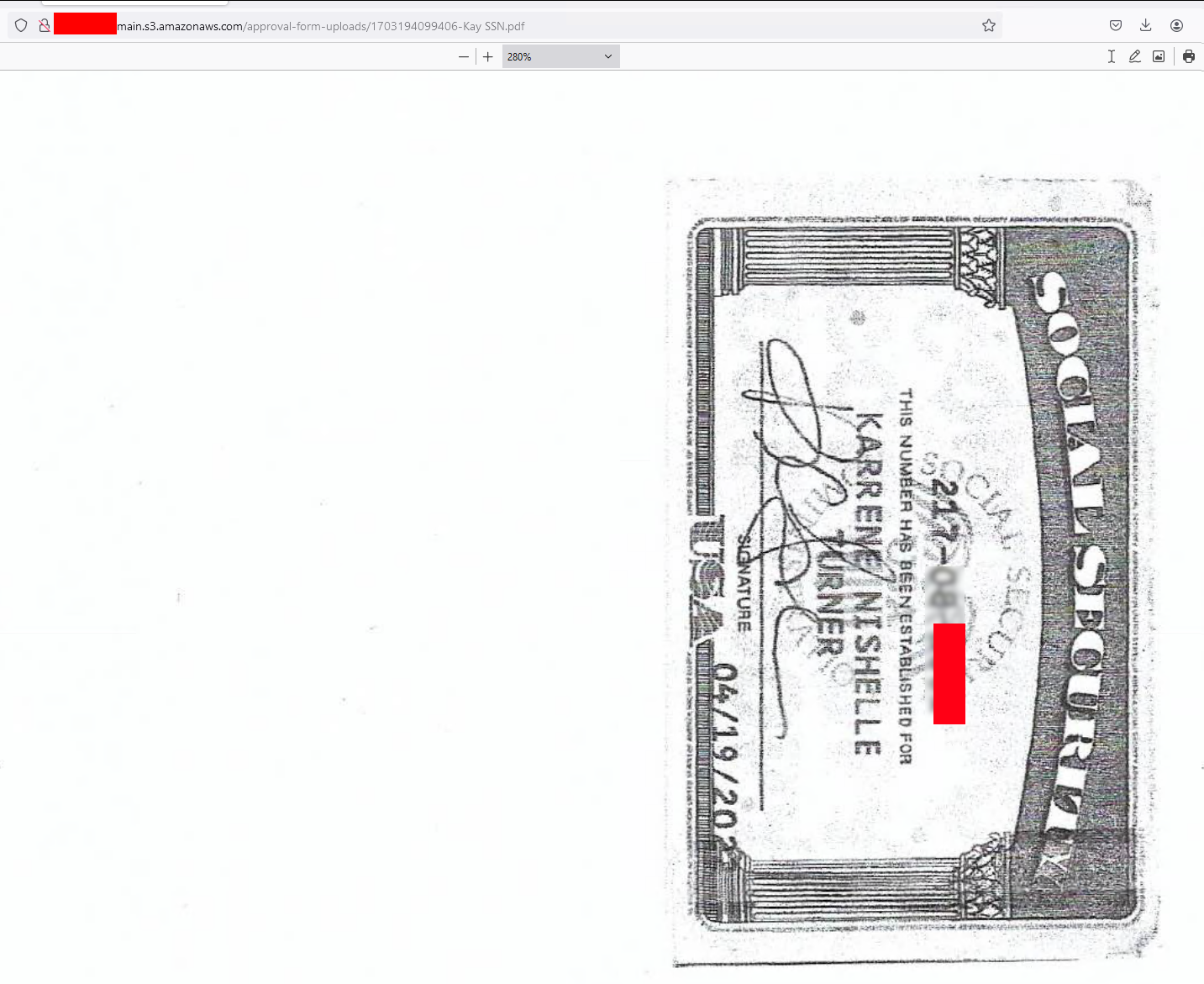

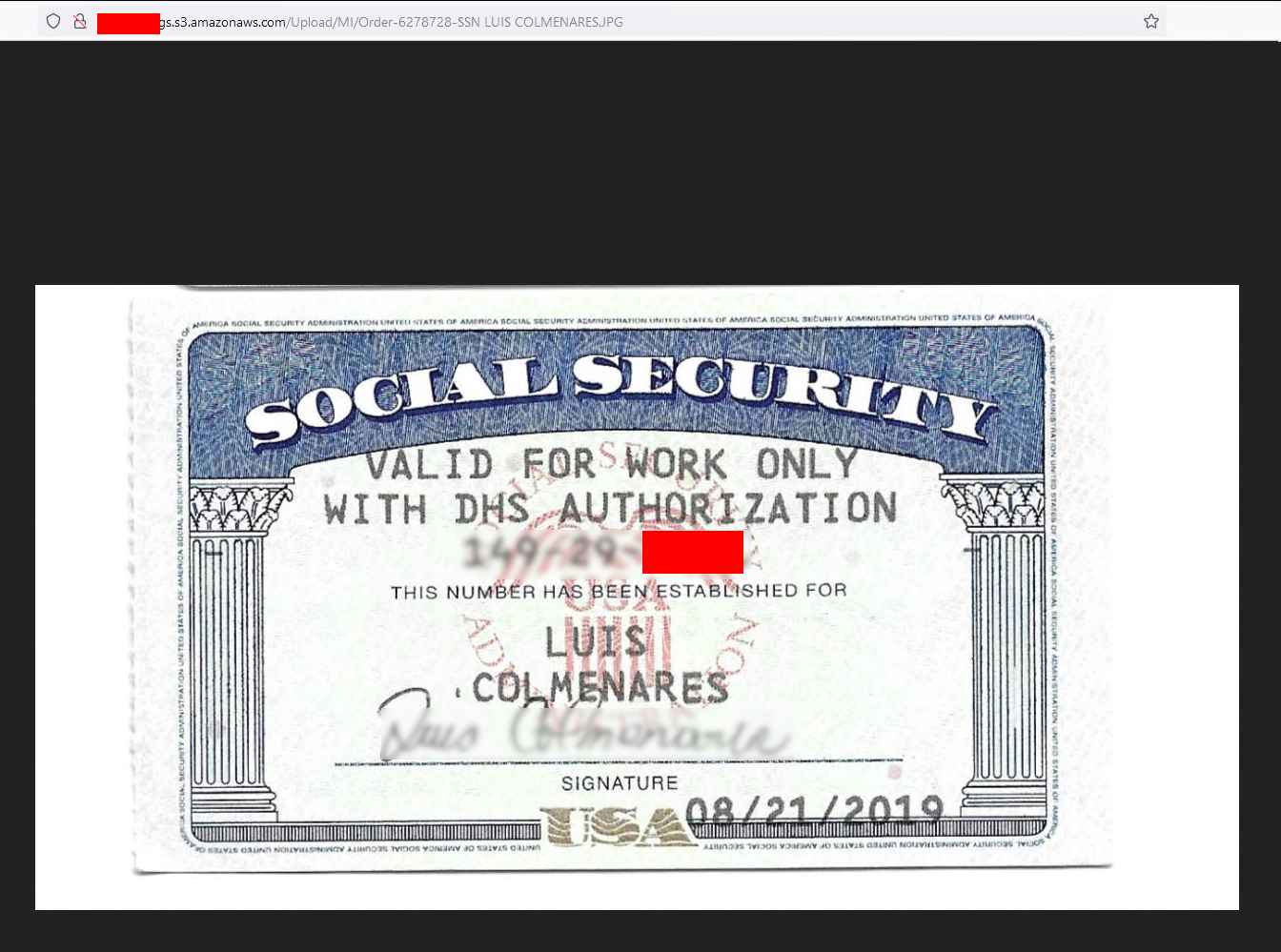

SSNs

Some US Social Security Numbers…

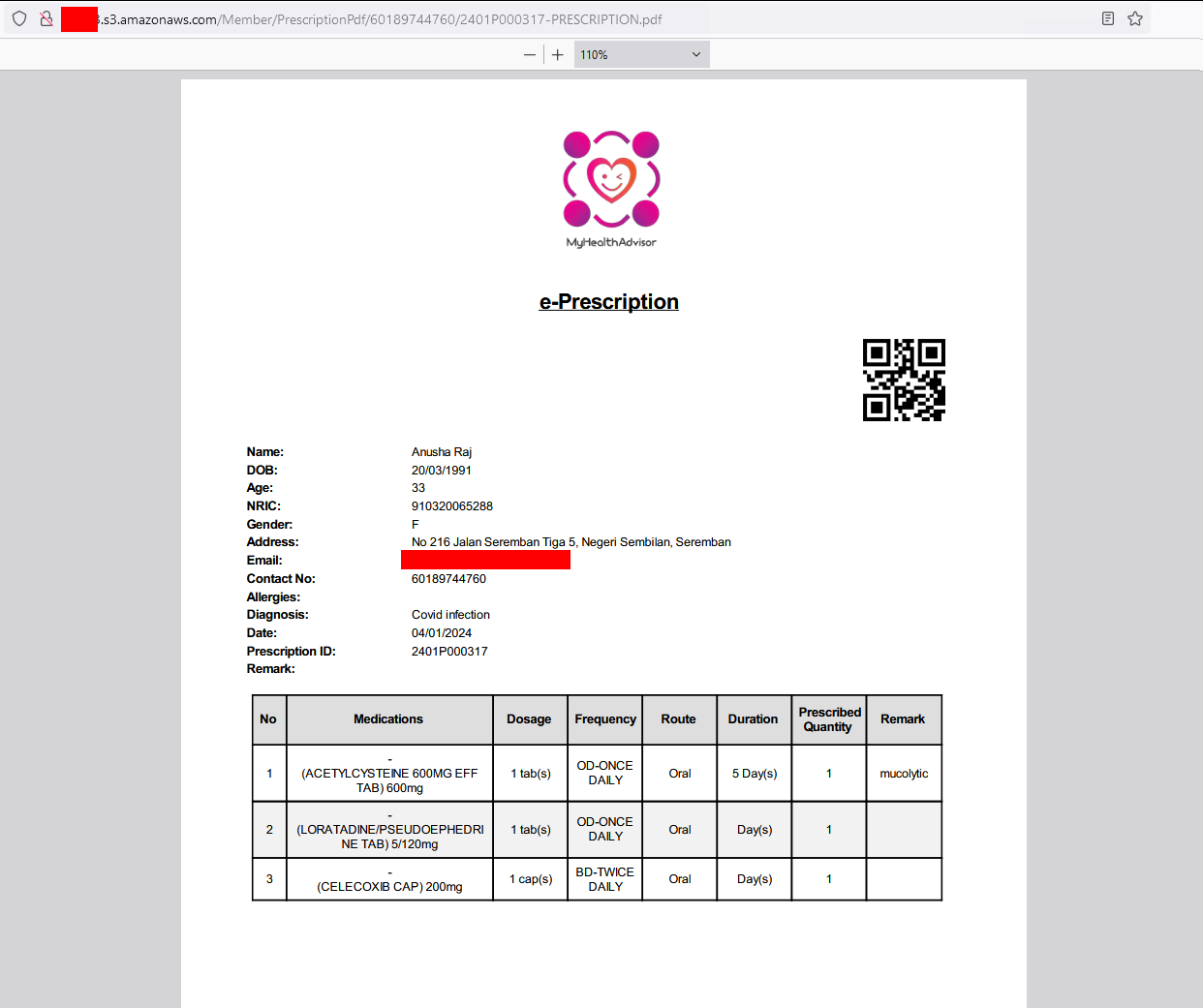

Prescriptions

Medical data and prescriptions

Prescriptions

There also seems to be quite a lot of company data available, including video call recordings, prescriptions, medical records, …

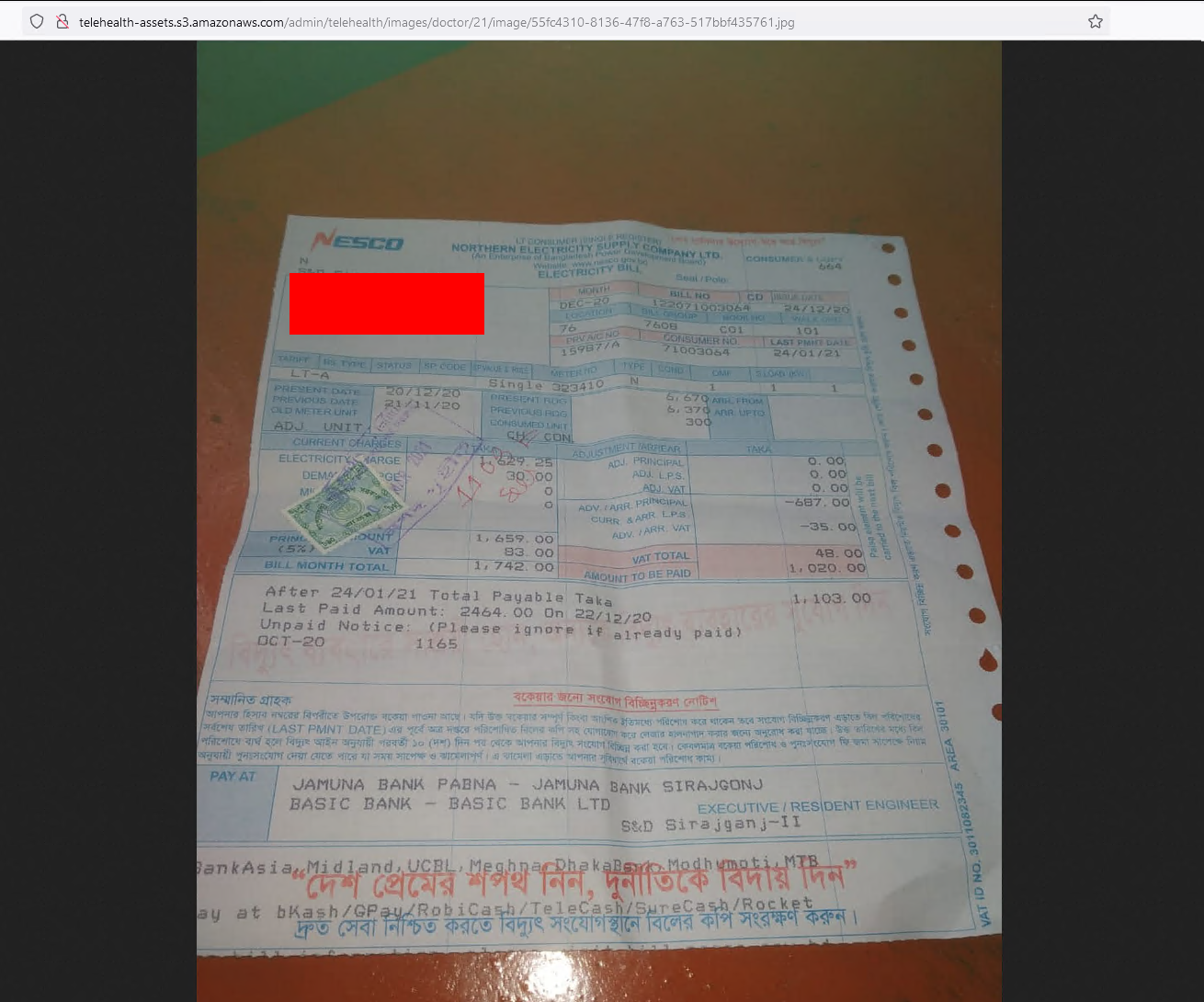

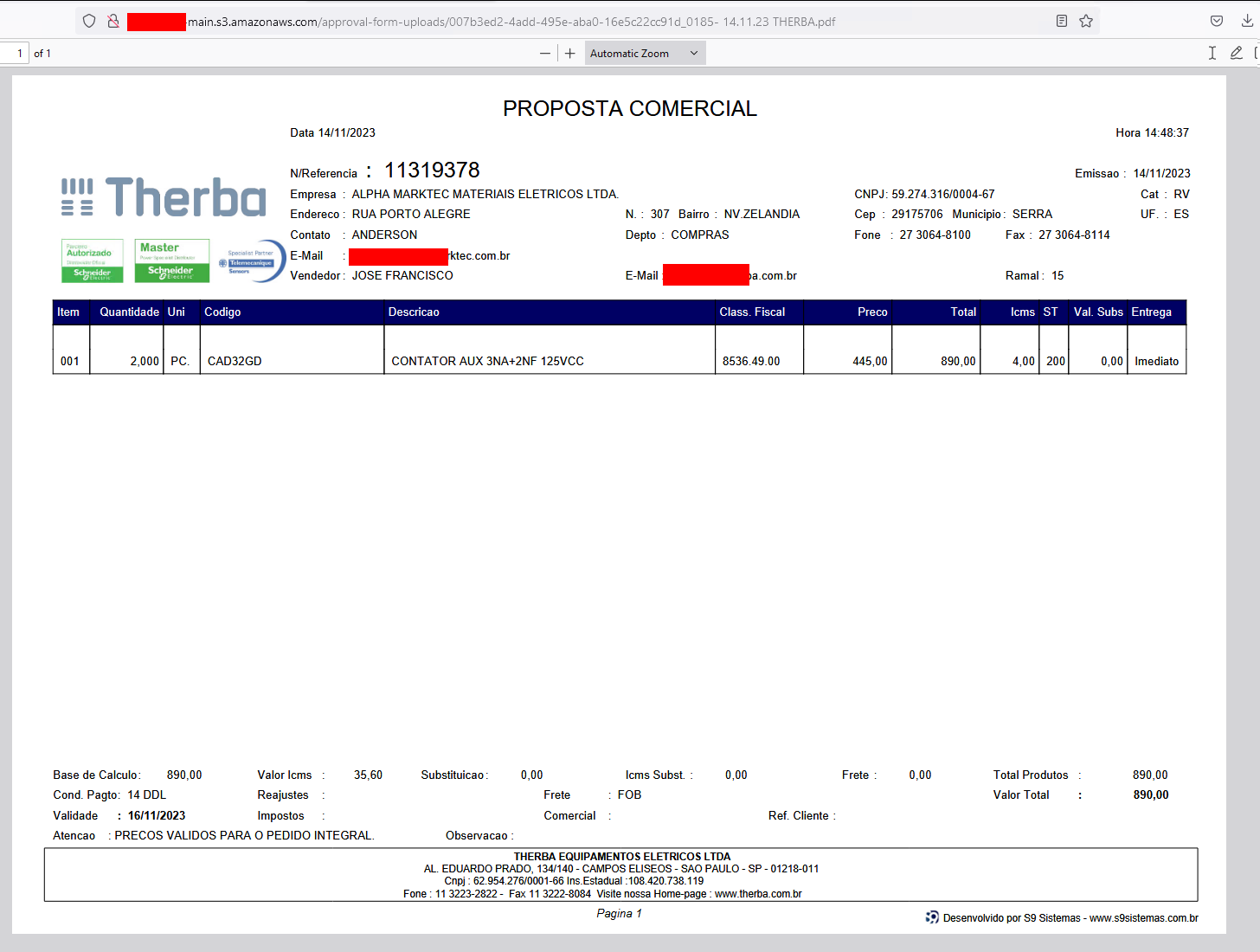

Bills and customer data

Of course, bills and customer data are also directly available. This is bad, of course, but it gets worse when it comes to database dumps.

Policies

https://buckets.grayhatwarfare.com/files?keywords=versicherung&fullpath=1&extensions=pdf,png,jpg,jpeg&order=last_modified&direction=desc&page=1

insurance policies

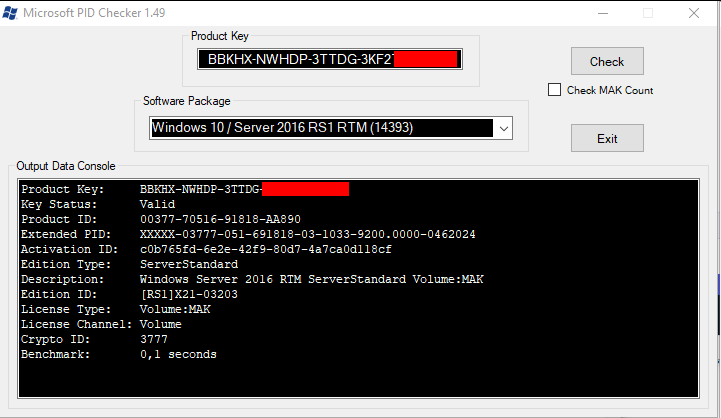

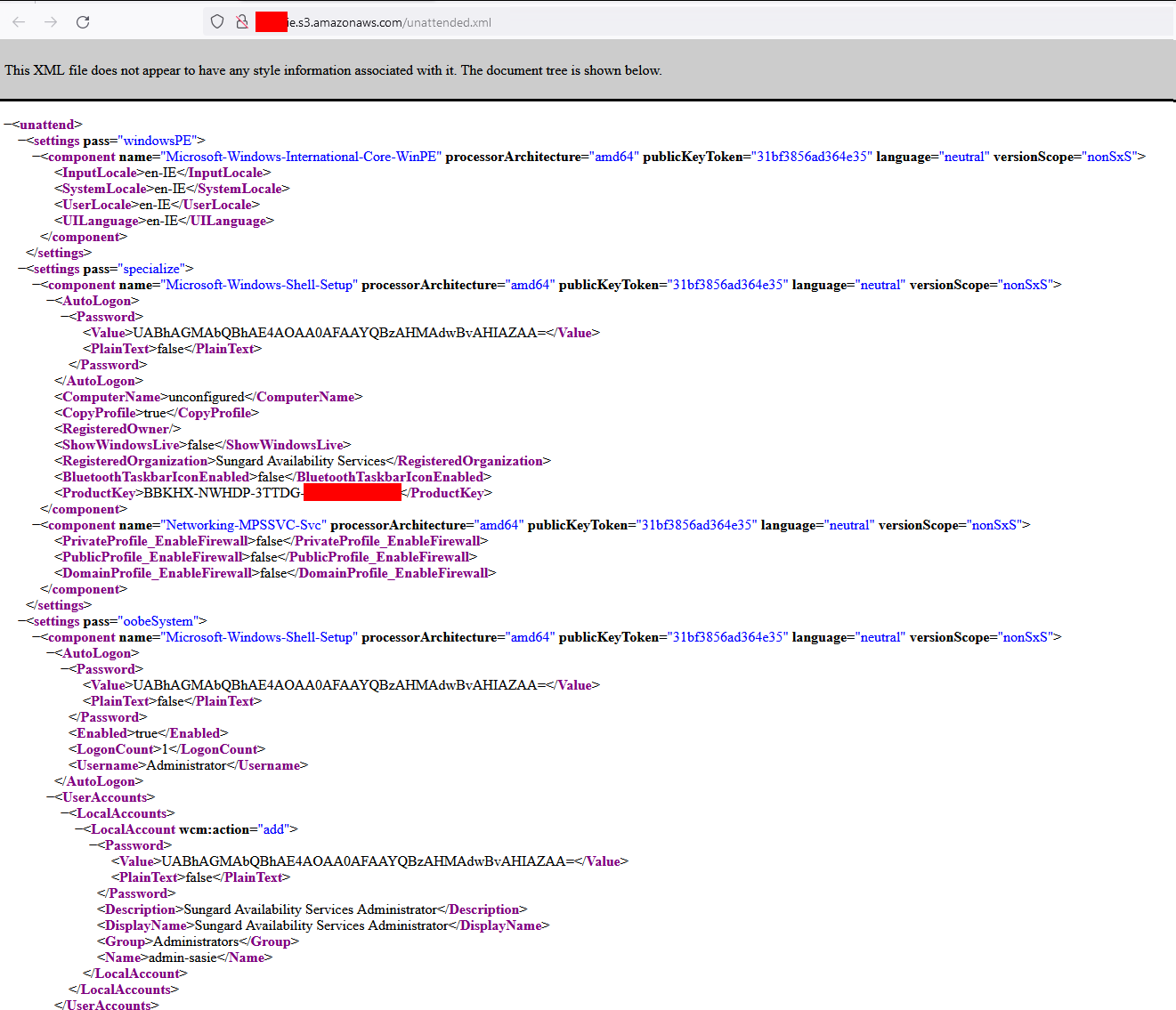
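The search links in this post all follow the same query-string pattern, so they can be generated instead of typed. A minimal sketch, assuming only the parameters visible in the link above (`keywords`, `fullpath`, `extensions`, `order`, `direction`, `page`); the function name `build_search_url` is made up for illustration:

```python
from urllib.parse import urlencode

BASE_URL = "https://buckets.grayhatwarfare.com/files"


def build_search_url(keywords, extensions, page=1):
    """Build a file-search URL using the parameters seen in the link above."""
    params = {
        "keywords": keywords,
        "fullpath": 1,
        "extensions": ",".join(extensions),
        "order": "last_modified",
        "direction": "desc",
        "page": page,
    }
    # safe="," keeps the comma-separated extension list unescaped
    return f"{BASE_URL}?{urlencode(params, safe=',')}"


print(build_search_url("versicherung", ["pdf", "png", "jpg", "jpeg"]))
```

Called with the keyword and extensions from the insurance search, this reproduces the link shown above.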

Licenses and stuff

There are also a lot of license files and keys available. For example, a Windows Server 2016 key from an unattended.xml.

unattended.xml with some passwords and a Windows Server key

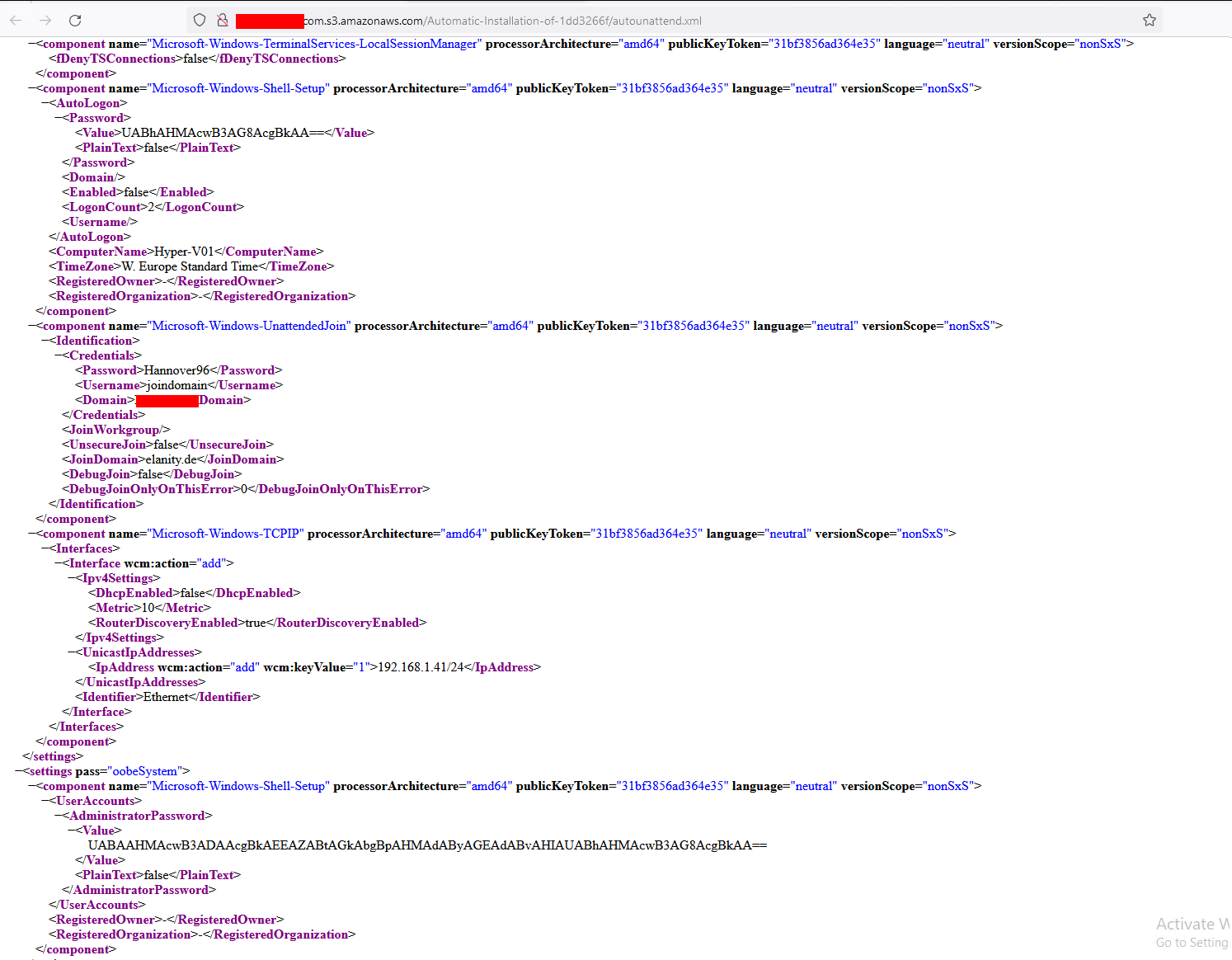

Another one, with additional domain join credentials

Credentials

When it comes to credentials, it is just a matter of finding juicy files. It is possible to get some inspiration from tools like Snaffler, which does an excellent job of finding secrets on-prem.

Default file names with credentials

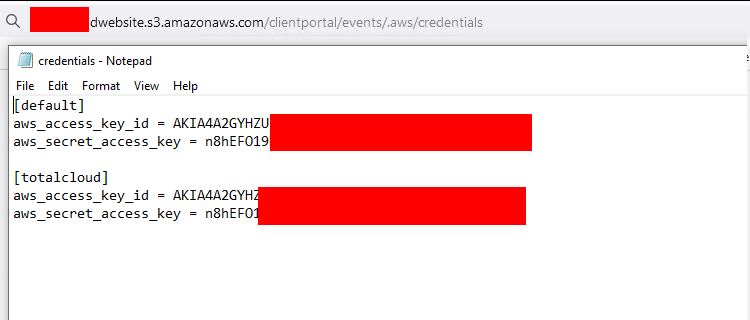
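The same name-based idea can be turned around to audit your own bucket listing before somebody else does. A minimal sketch, assuming a hypothetical rule set built only from file names and extensions mentioned in this post; it is not Snaffler's actual rule set:

```python
from pathlib import PurePosixPath

# Hypothetical rules, taken from the file types shown in this post
RISKY_NAMES = {"credentials.csv", "config.php", "unattended.xml", "gha-creds.json"}
RISKY_EXTENSIONS = {".pfx", ".p12", ".ovpn", ".pst", ".bak", ".cscfg", ".vbk", ".tib", ".bson"}


def is_risky(key):
    """Return True if an object key looks like one of the juicy file types."""
    path = PurePosixPath(key.lower())
    return path.name in RISKY_NAMES or path.suffix in RISKY_EXTENSIONS


def triage(keys):
    """Keep only the object keys that deserve a closer look."""
    return [key for key in keys if is_risky(key)]


listing = ["admin/Credentials.csv", "img/logo.png", "backup/db.BAK", "vpn/office.ovpn"]
print(triage(listing))
```

Matching is case-insensitive on purpose, as file names in buckets are rarely consistent.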

AWS - credentials.csv

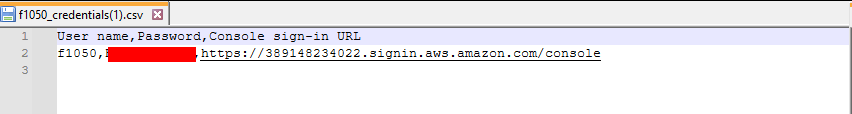

When a new IAM user is added in AWS, a credentials.csv file containing the user's credentials is generated.

CSV files as generated when adding an IAM user in AWS

A quick look by my friend and personal AWS magician @rootcathacking (as I have no clue about AWS) revealed that the IAM roles are misconfigured and the complete organization could be compromised.

If you want some deeper random AWS knowledge, visit his blog: https://rootcat.de/blog/

Of course, they are working and a complete organization could be compromised

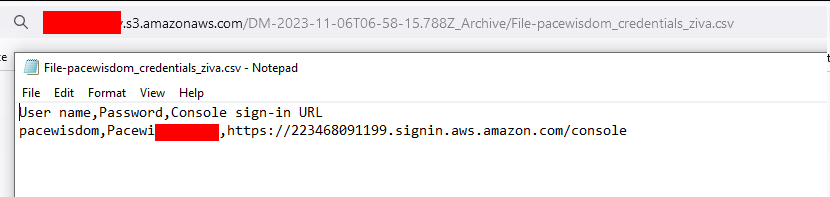

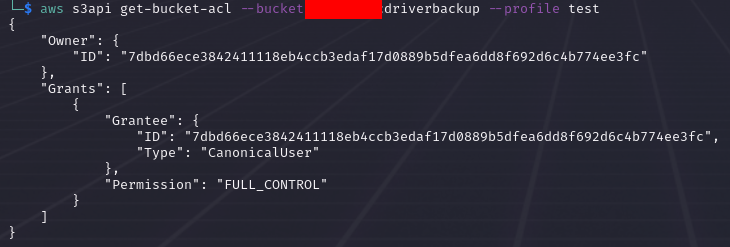

AWS Keys

But those credentials are surely invalid, right? Sure…

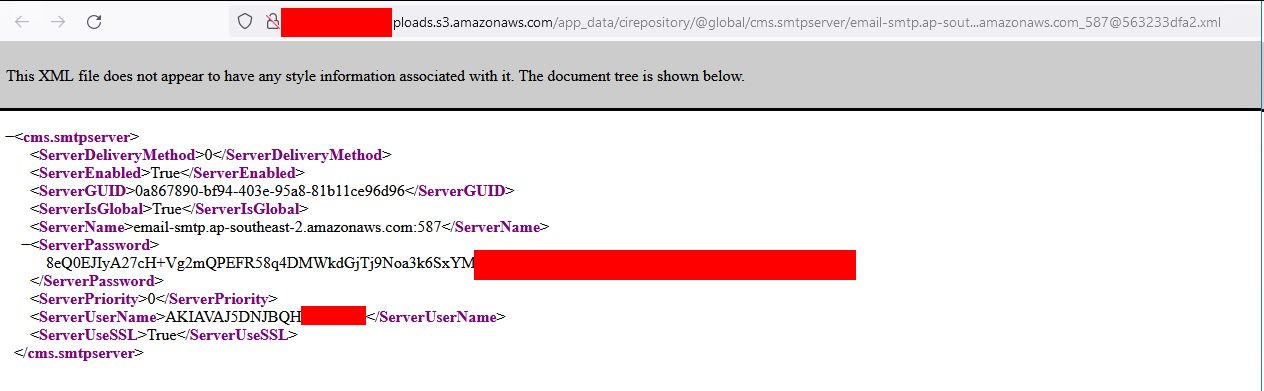

AWS SMTP Credentials

AWS SMTP Credentials

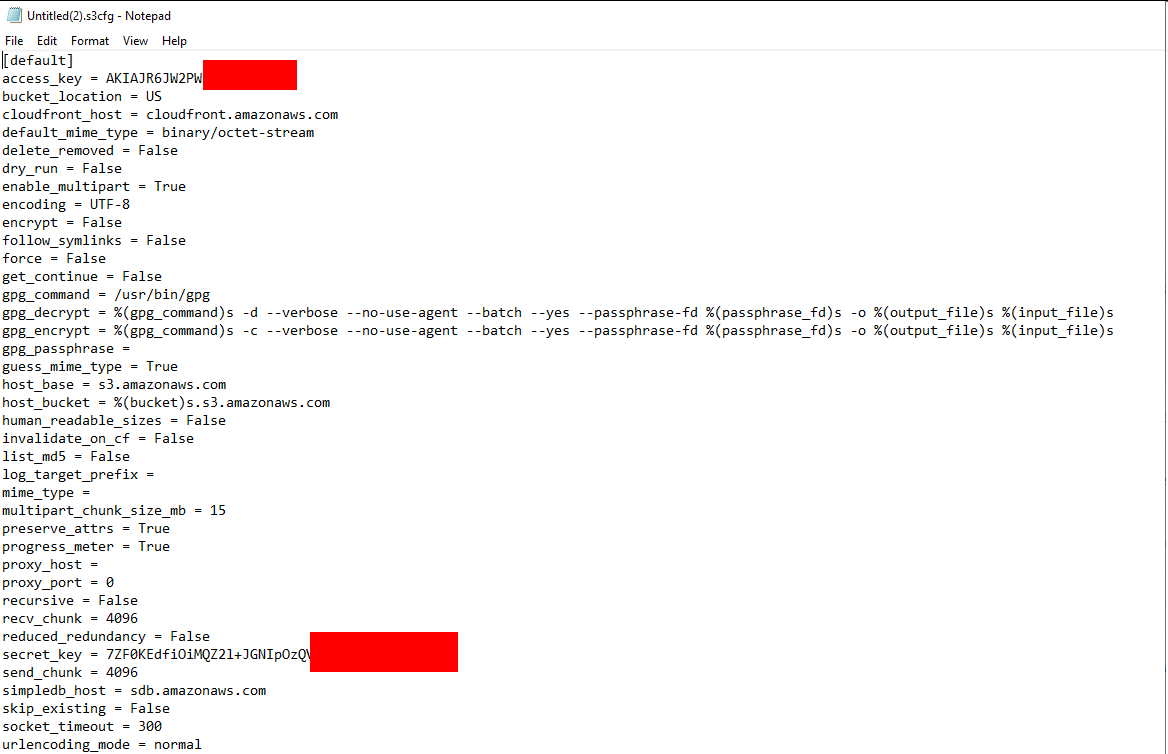

s3conf

Extension for mounting an S3 bucket

Azure Token

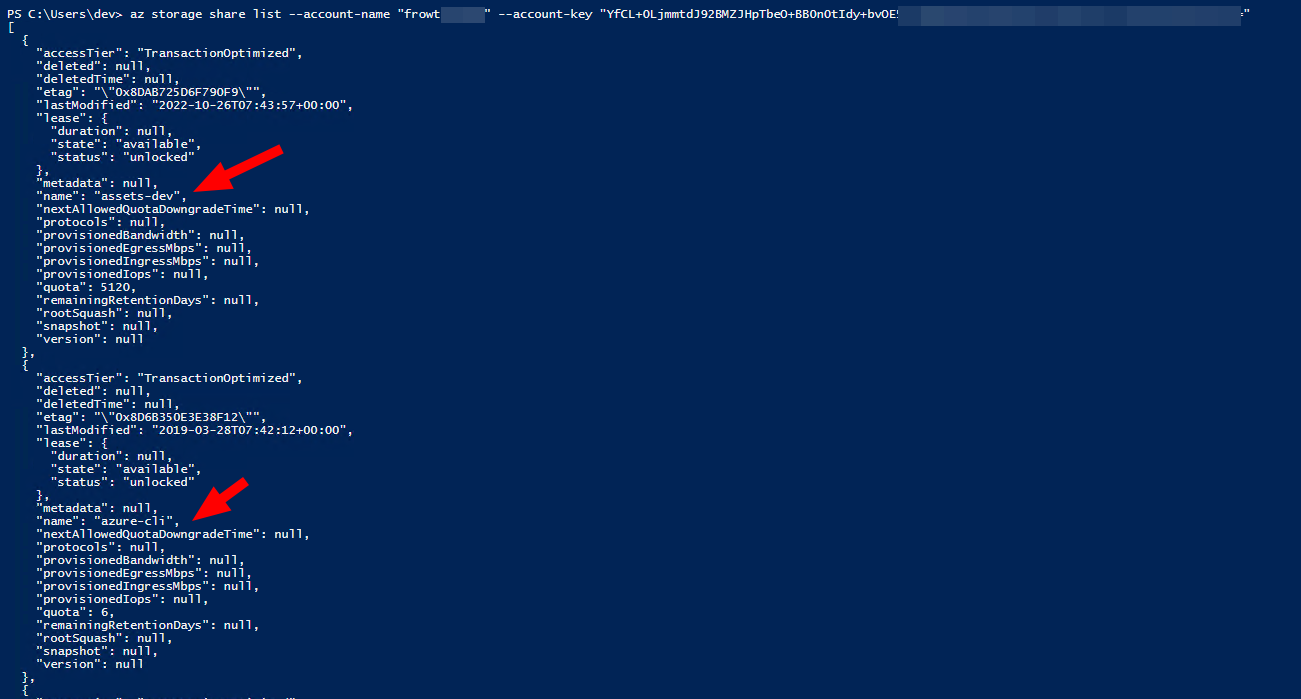

I also found a bunch of Azure storage account credentials, e.g. for the blob service. To verify those credentials, we can use the Azure CLI and query the account like this.

az storage share list --account-name "frowt######" --account-key "YfCL+0LjmmtdJ92BMZJHpTbeO+BB0n0tIdy+bvOE5xiJuMUG2ItHFyNou1ehl75u4p######################"

az storage file list --share-name "assets-dev" --account-name "frowt######" --account-key "YfCL+0LjmmtdJ92BMZJHpTbeO+BB0n0tIdy+bvOE5xiJuMUG2ItHFyNou1ehl75u4p######################"

Valid Blob credentials for Azure

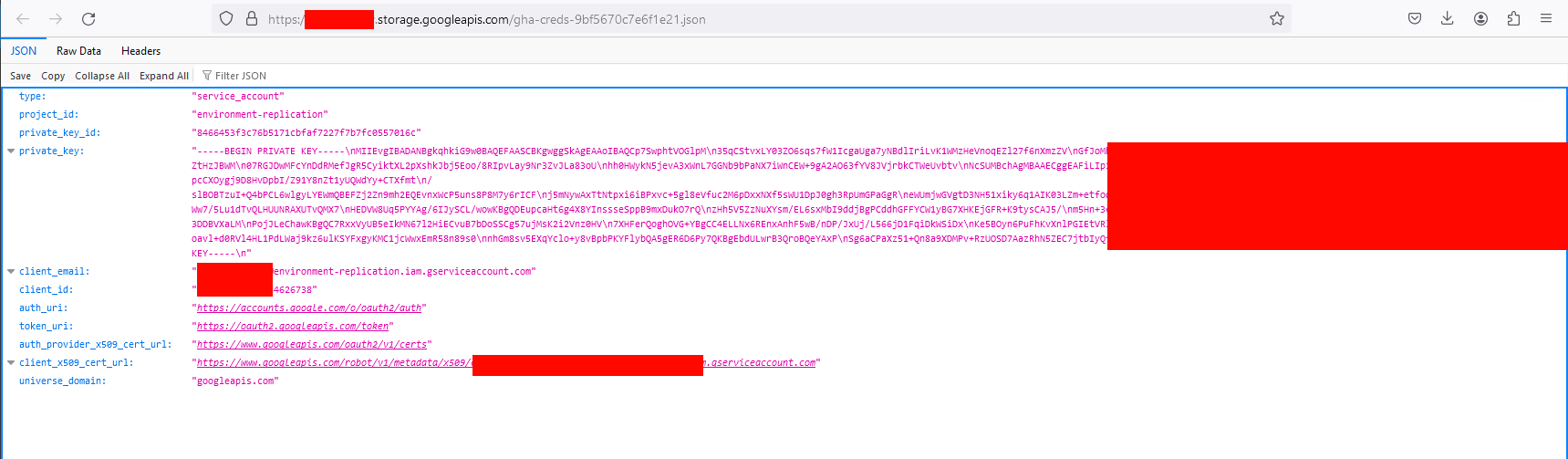

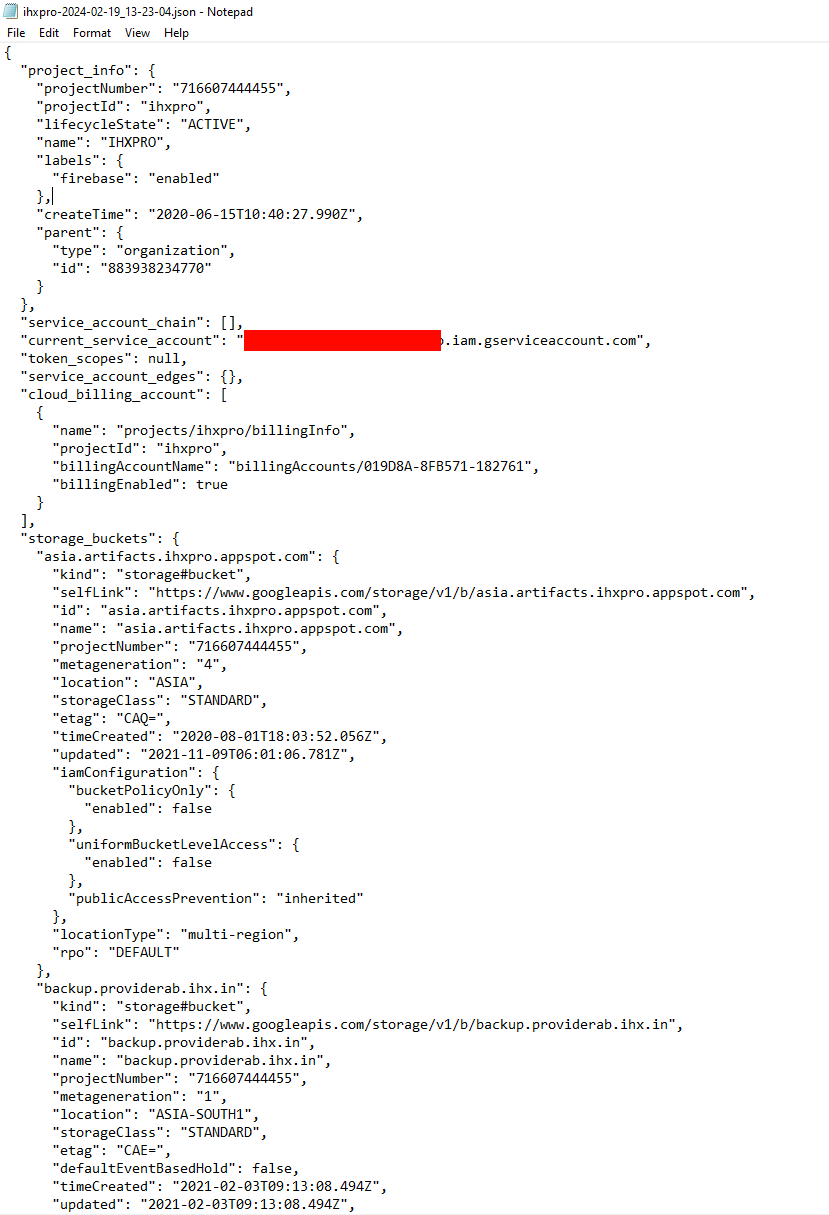

Google Cloud

We can, for example, search for gha-creds.json files. Those files hold Google Cloud service account tokens.

The gcp_scanner is really handy if you want to enumerate a token and its access.

And of course we again get some valid tokens…

Valid credentials and a report from the gcp scanner

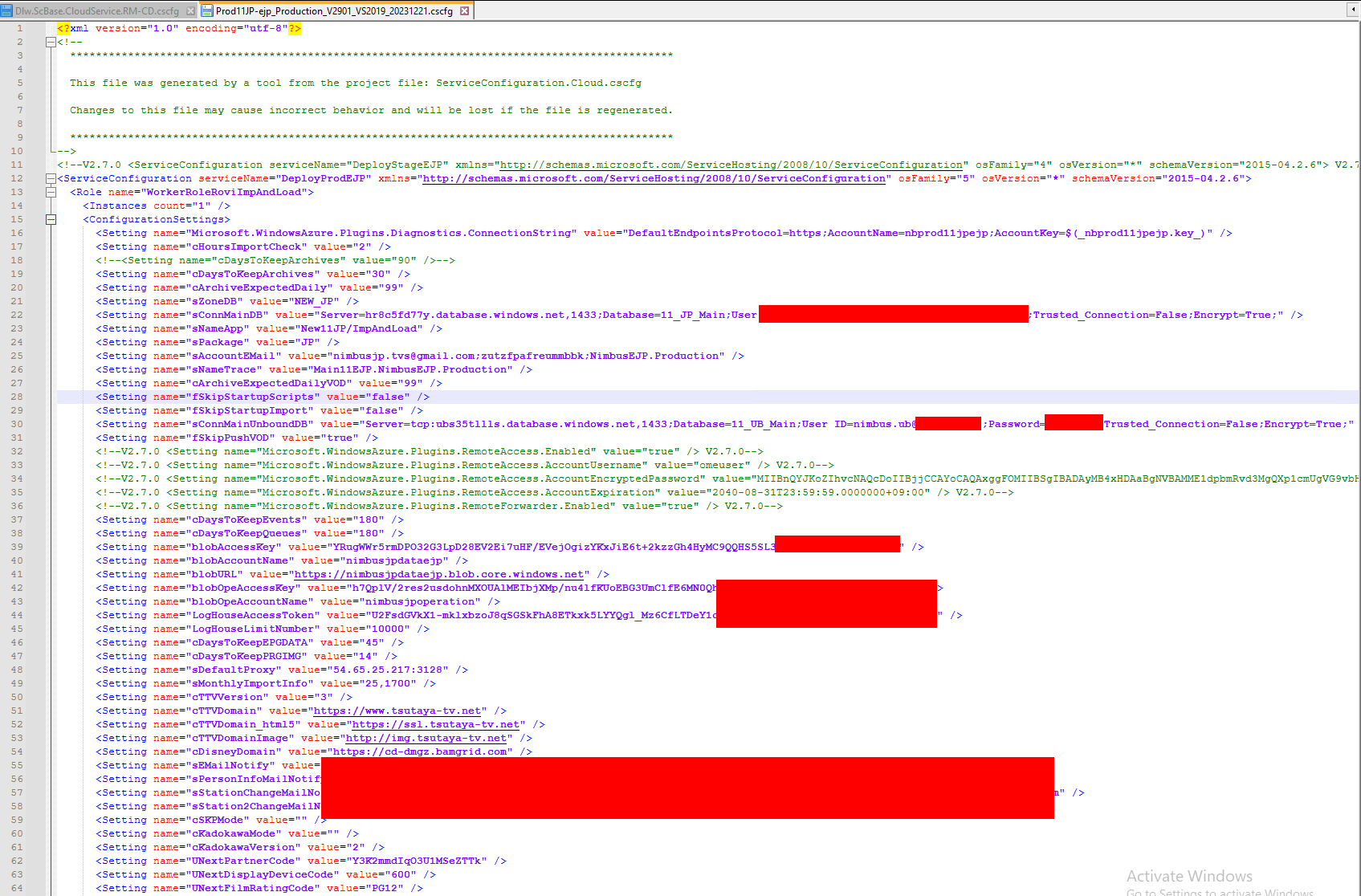

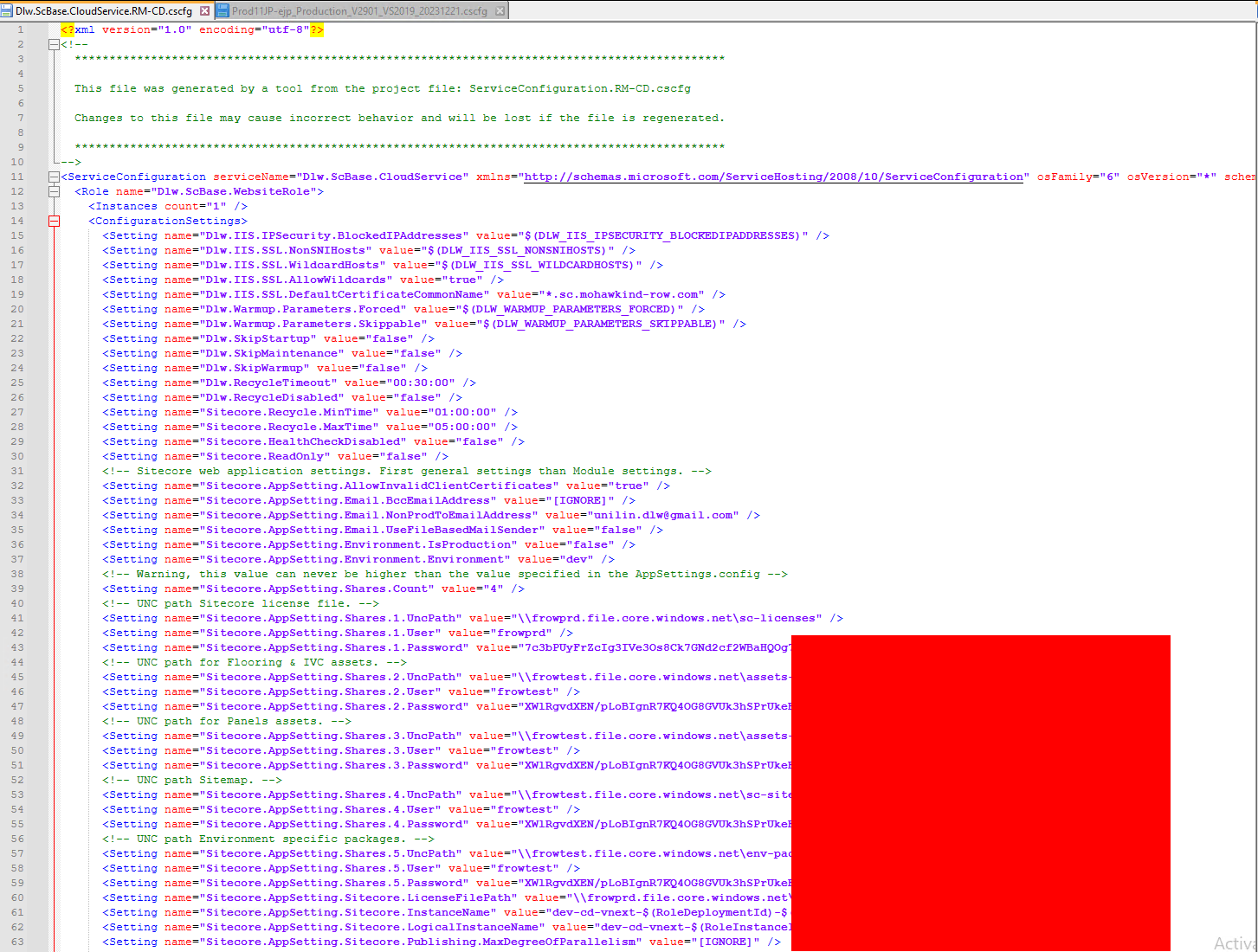

CSCFG - Azure Cloud Services (classic) Config Schema

Those cscfg files hold a crazy amount of different credentials. Most, but not all, of them are related to Azure resources.

Certificates

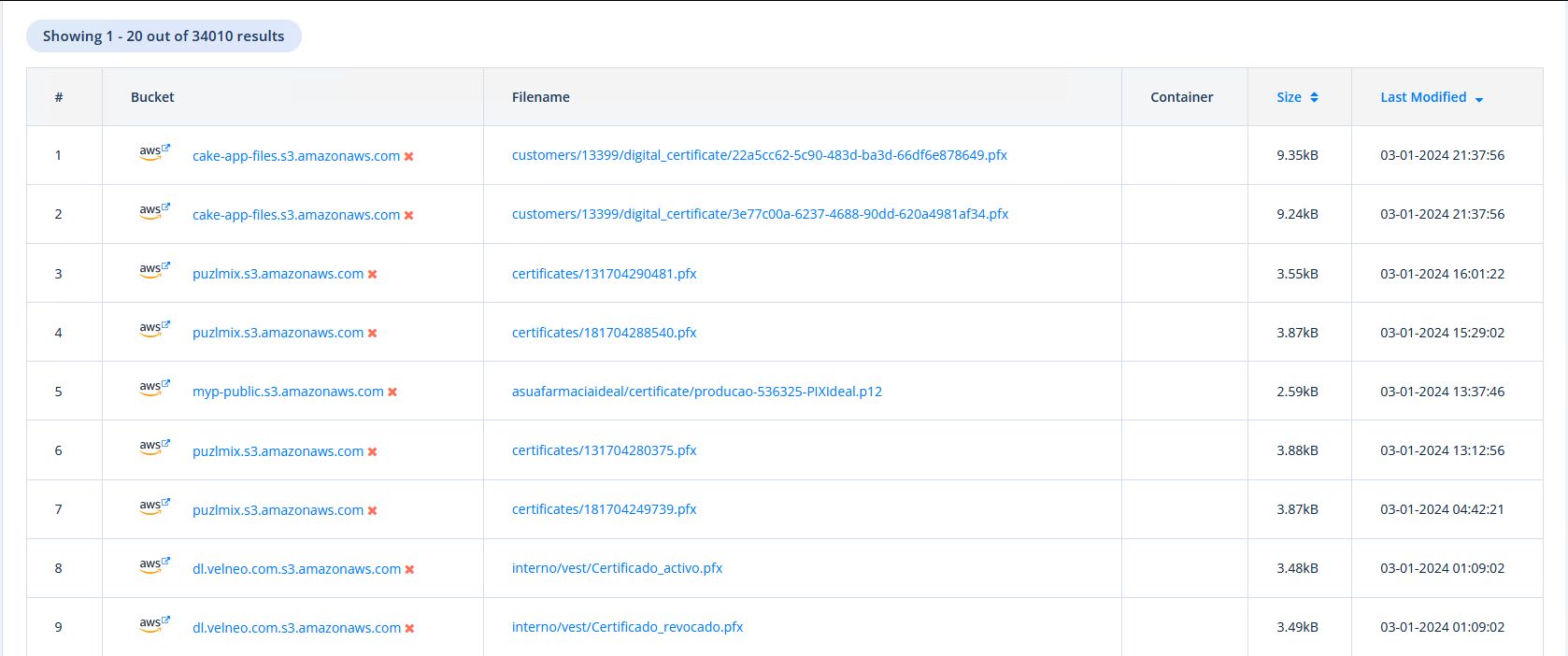

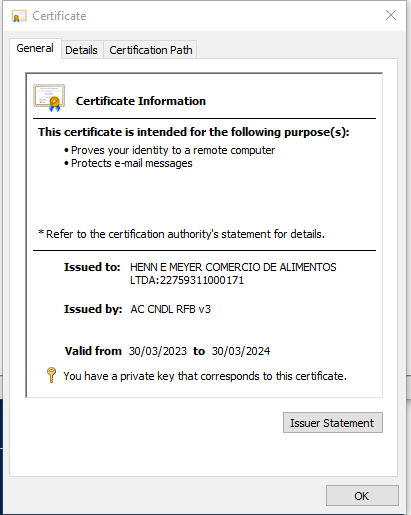

Over 30k hits for .pfx and .p12 certificates. Why those two types? In contrast to .der, .pem and .key files, we know the purpose of these certificates and usually also a URL. A private certificate or key is nice to have, but quite useless if we do not know where to use it …

Generally, it would be possible to try some passwords for the certificates and there might be some interesting ones.

Most of the certificates with a weak password are for Client Authentication and Secure Email, e.g. for S/MIME.

We can easily get over 200 valid (!) certificates, like this one.

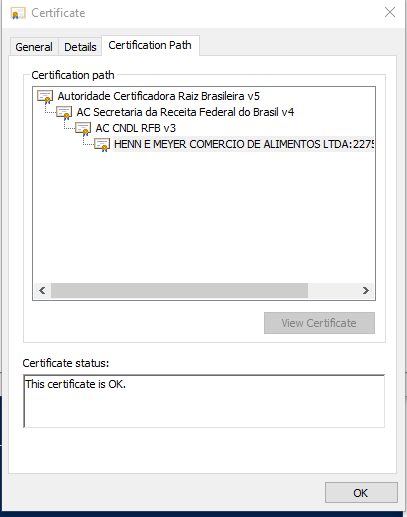

Typical secure email and identity certificate

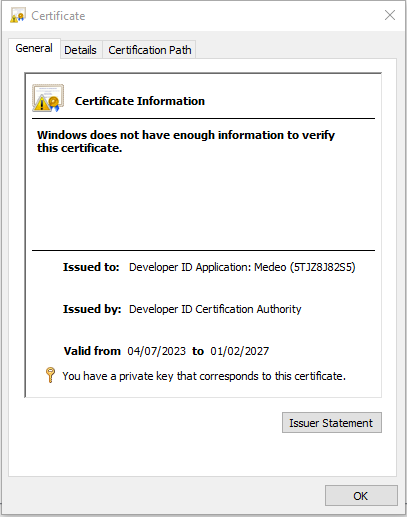

There are some other quite interesting certificates, e.g. Code Signing for Apple Developers.

kligo-cert.p12 PW: #####

Code Signing (1.3.6.1.5.5.7.3.3)

01/02/2027

False

C=US, O=Apple Inc., OU=Apple Certification Authority, CN=Developer ID Certification Authority

    PS > $cert = New-Object System.Security.Cryptography.X509Certificates.X509Certificate2
    PS > $cert.Import("$pwd\kligo-cert.p12",'#####','DefaultKeySet')
    PS > $cert.ToString()
    [Subject]
      C=US, O=Medeo, OU=5TJZ8J82S5, CN=Developer ID Application: Medeo (5TJZ8J82S5), OID.0.9.2342.19200300.100.1.1=5TJZ8J82S5

    [Issuer]
      C=US, O=Apple Inc., OU=Apple Certification Authority, CN=Developer ID Certification Authority

    [Serial Number]
      67D5A56F672A53C9

    [Not Before]
      04/07/2023 14:36:04

    [Not After]
      01/02/2027 23:12:15

    [Thumbprint]
      5467E477BAC46A3E8E83A8BDBB18A24F48B13595

Remote Tools

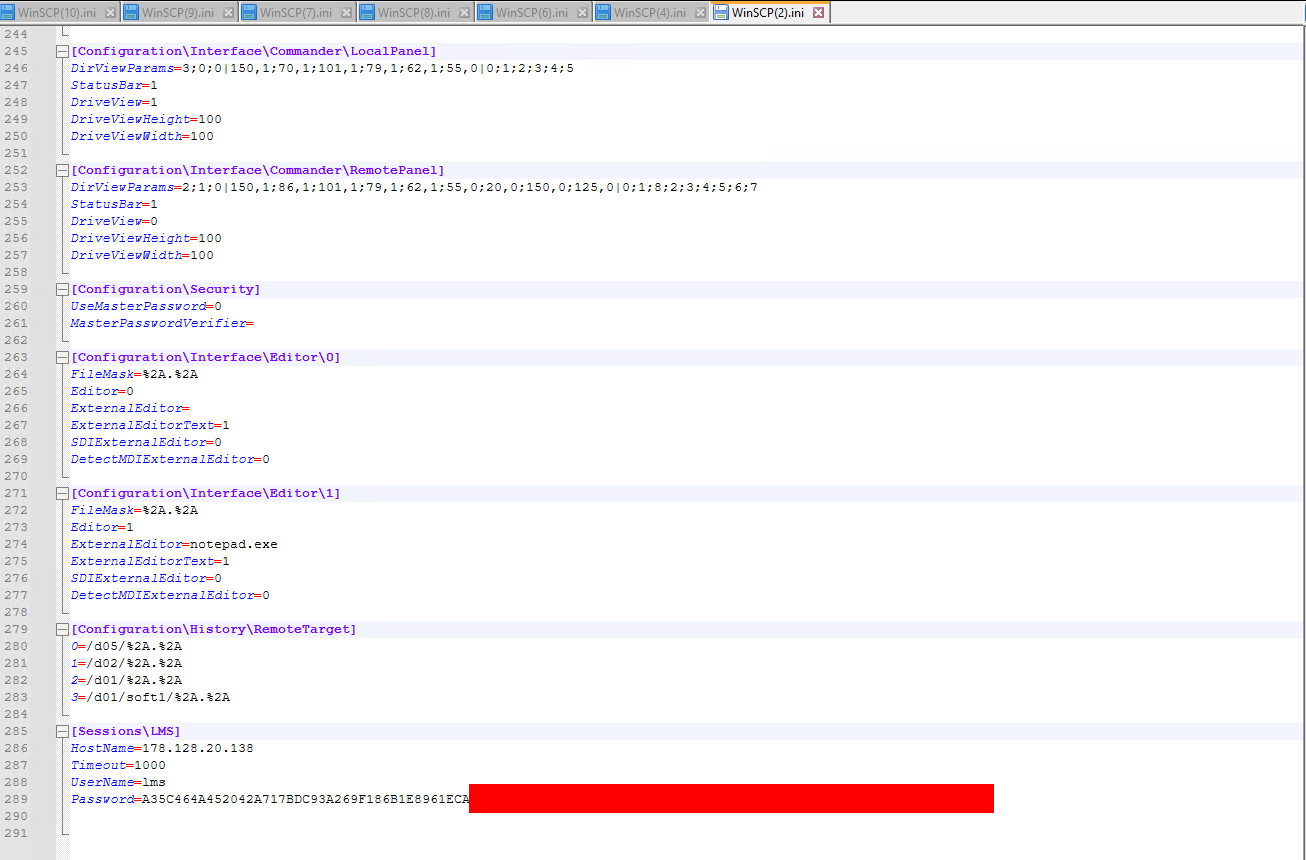

WinSCP

WinSCP config files are well known to be insecurely encrypted, meaning it is possible to simply recover the passwords…

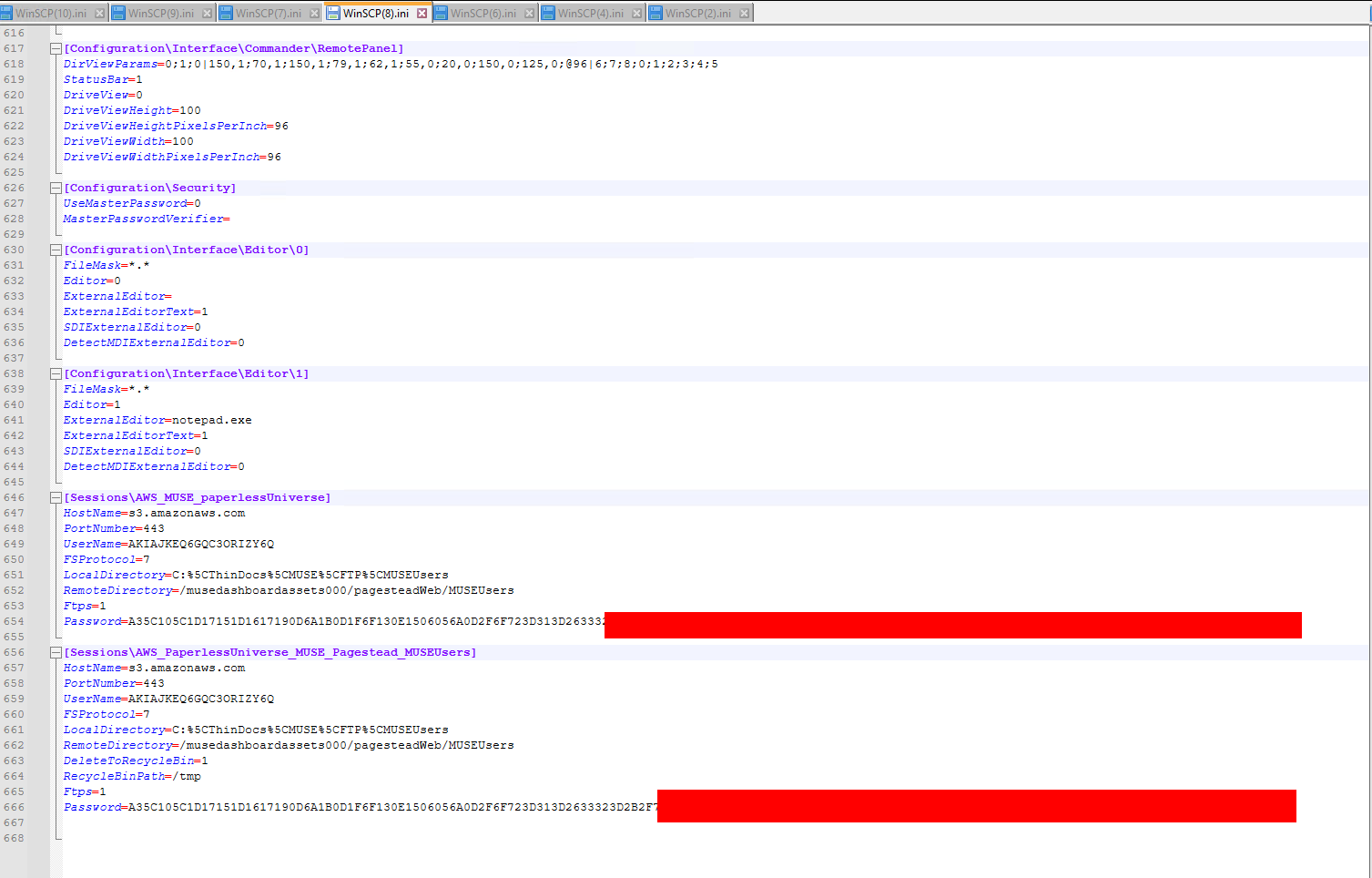

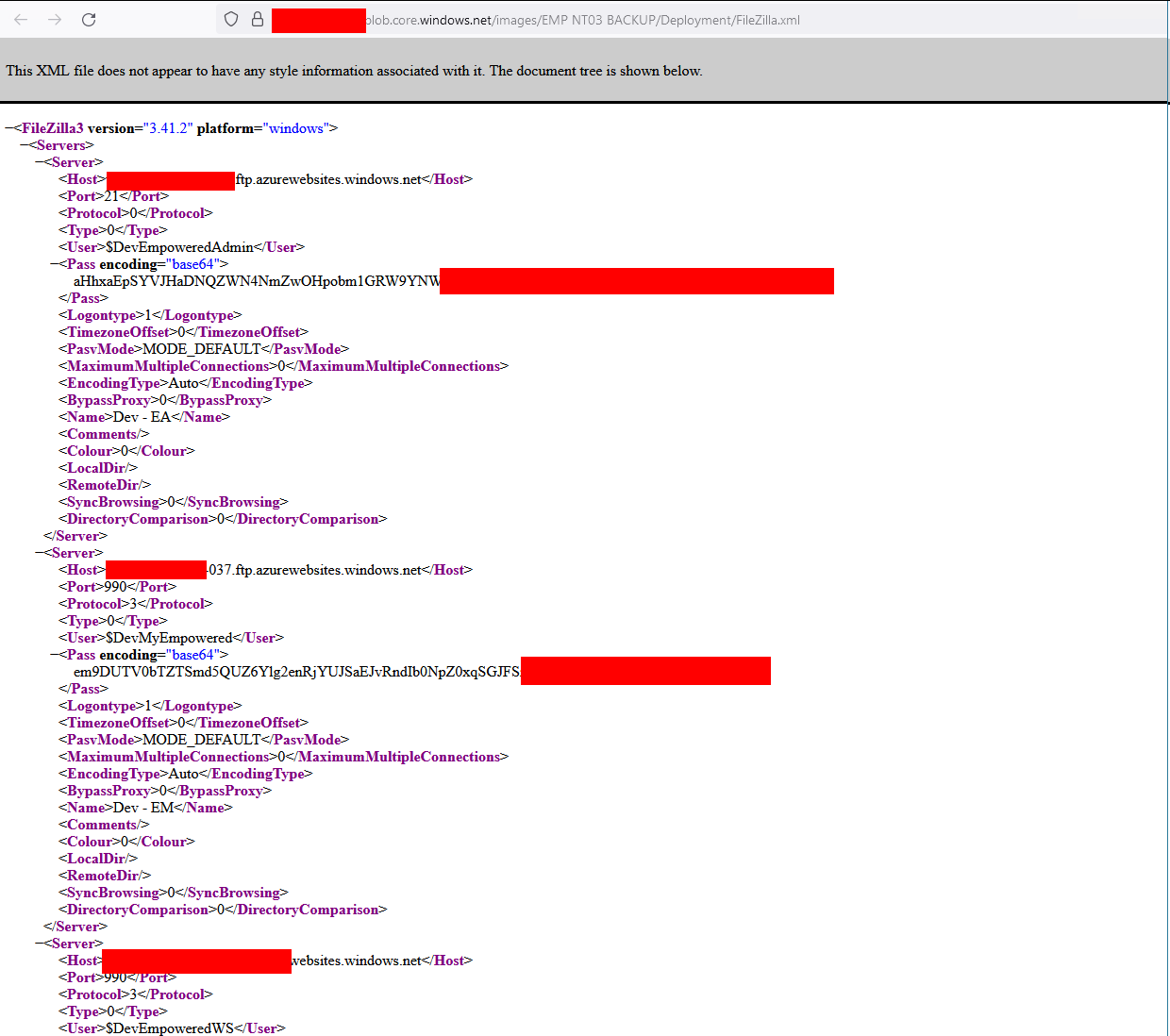

FileZilla

<Pass encoding="base64"> I guess you can see where this is going

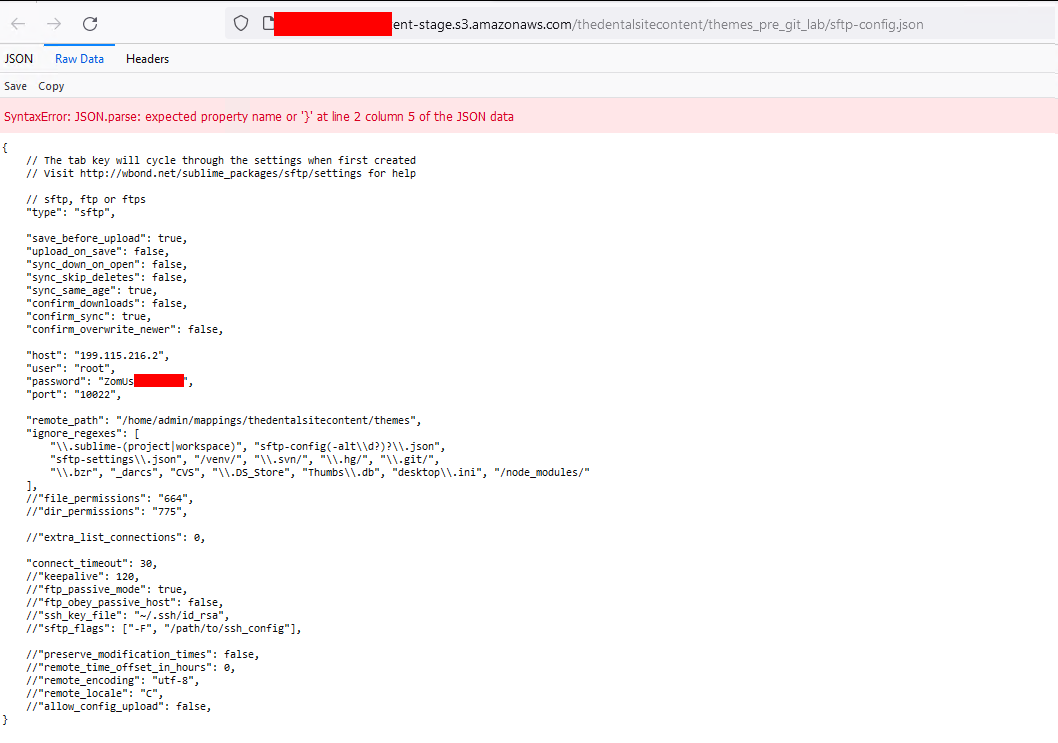

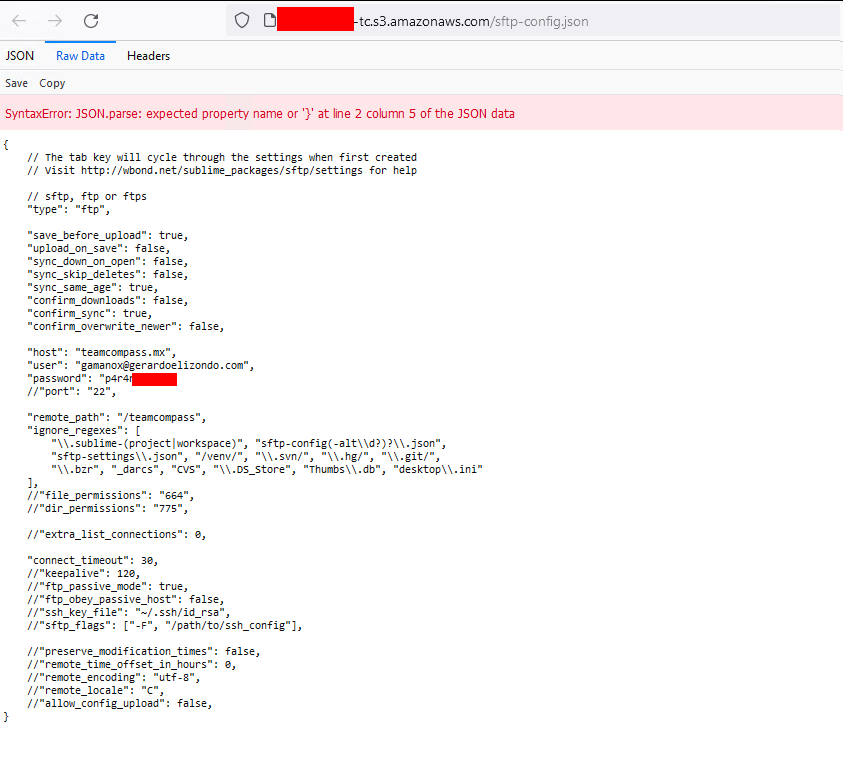
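To spell out where this is going: base64 is an encoding, not encryption, so anyone holding the file can read the password. A minimal sketch using a made-up `<Server>` snippet in the style of a FileZilla site entry; the host, user and password are invented, and only the `<Pass encoding="base64">` element is taken from the text above:

```python
import base64
import xml.etree.ElementTree as ET

# Invented demo values, encoded here so the round trip is visible
ENCODED = base64.b64encode(b"not-a-real-password").decode("ascii")
SAMPLE = f"""<Server>
  <Host>ftp.example.com</Host>
  <User>demo</User>
  <Pass encoding="base64">{ENCODED}</Pass>
</Server>"""


def decode_passwords(xml_text):
    """Return the cleartext of every base64-encoded <Pass> element."""
    root = ET.fromstring(xml_text)
    passwords = []
    for node in root.iter("Pass"):
        if node.get("encoding") == "base64" and node.text:
            passwords.append(base64.b64decode(node.text).decode("utf-8"))
    return passwords


print(decode_passwords(SAMPLE))
```

No key or secret is involved at any point, which is exactly the problem.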

Sublime

The Sublime config sometimes holds SFTP credentials.

Password Manager

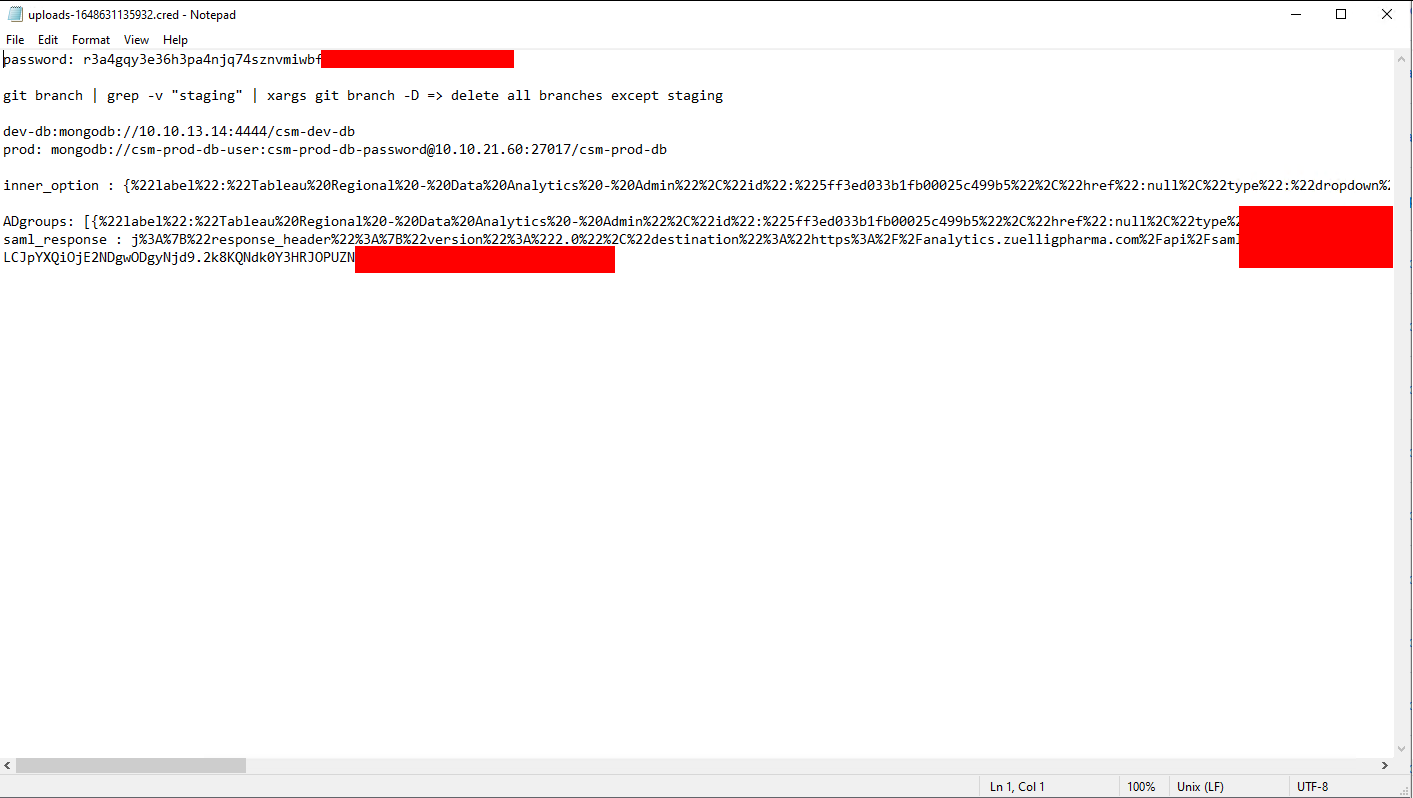

Some people invent their own file extensions, like .pass or .creds, and then store their credentials in cleartext inside. Additionally, some KeePass databases can be found; however, these would require cracking the master password, which is no fun as it is really slow.

Sample of a self-made password “safe”

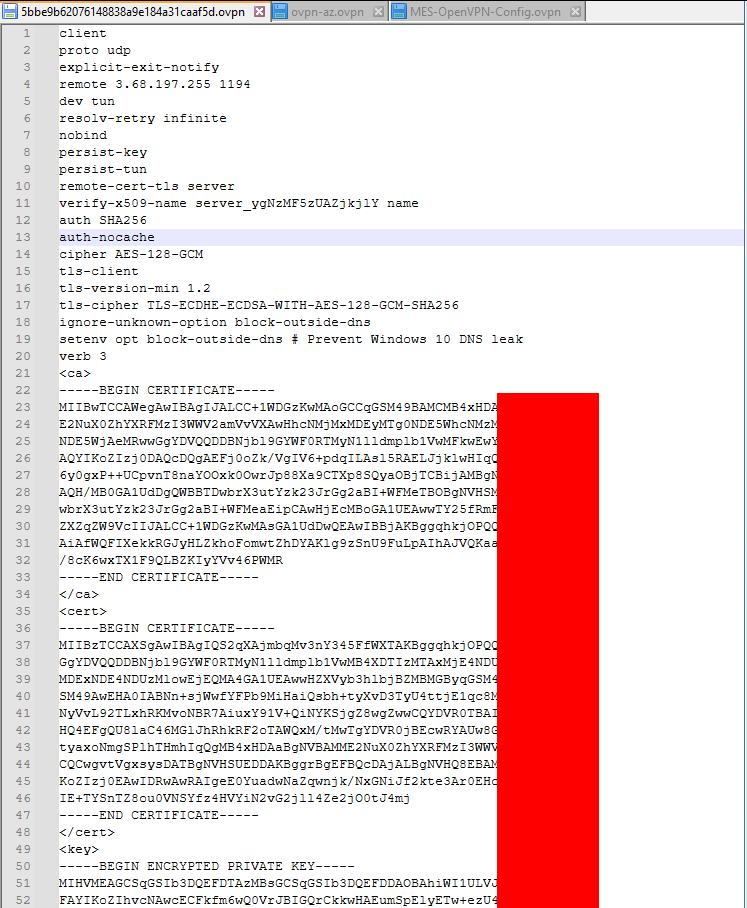

VPN

OpenVPN config files are interesting, as some of them do not require user-pass authentication. This would allow anyone holding the file to connect to the VPN…

Sample ovpn config without user-pass authentication

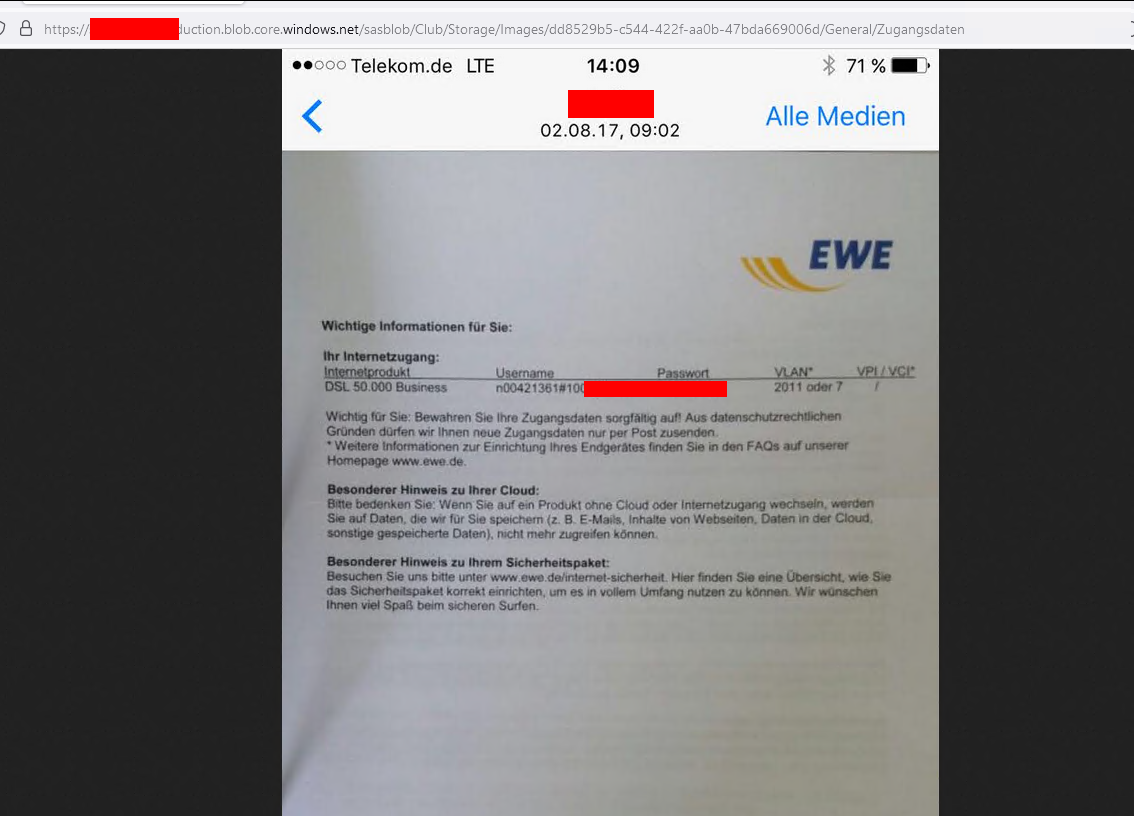

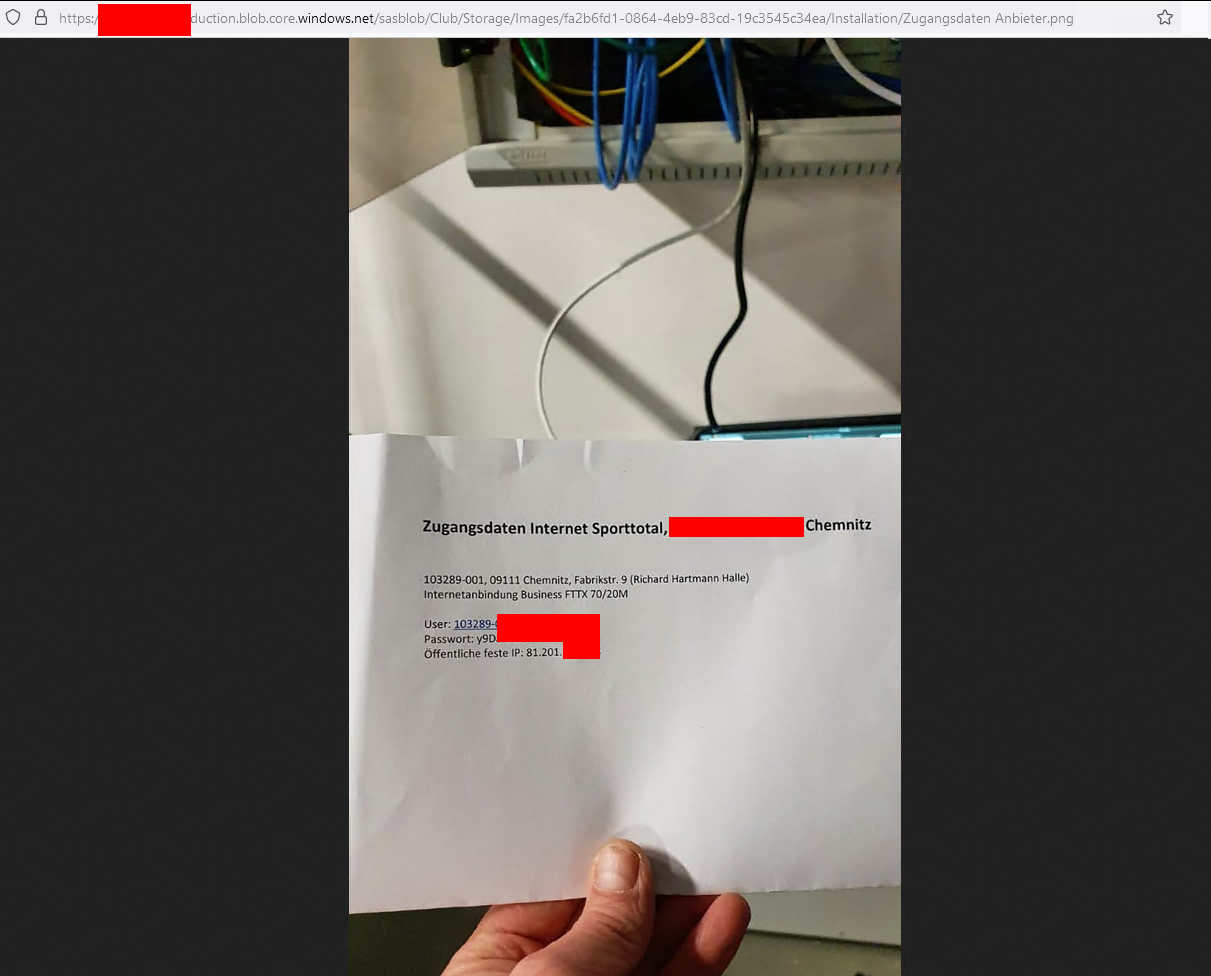
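Whether a given config asks for a username and password can be checked by looking for the `auth-user-pass` directive. A minimal sketch for auditing your own profiles; the helper name and both sample configs are invented for illustration:

```python
def requires_user_pass(ovpn_text):
    """Return True if the config contains an auth-user-pass directive."""
    for raw_line in ovpn_text.splitlines():
        line = raw_line.strip()
        # Skip empty lines and comments (OpenVPN accepts both # and ;)
        if not line or line.startswith(("#", ";")):
            continue
        if line.split()[0] == "auth-user-pass":
            return True
    return False


CERT_ONLY = """client
remote vpn.example.com 1194
# auth-user-pass
cert client.crt
key client.key
"""

WITH_LOGIN = """client
remote vpn.example.com 1194
auth-user-pass
"""

print(requires_user_pass(CERT_ONLY), requires_user_pass(WITH_LOGIN))
```

A config like `CERT_ONLY`, shipped together with its key material, is all a client needs to connect.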

Other

Some companies also store their ticket data in the buckets and there might be juicy information inside.

Credentials for an ISP portal #1

Credentials for an ISP portal #2

Software configurations

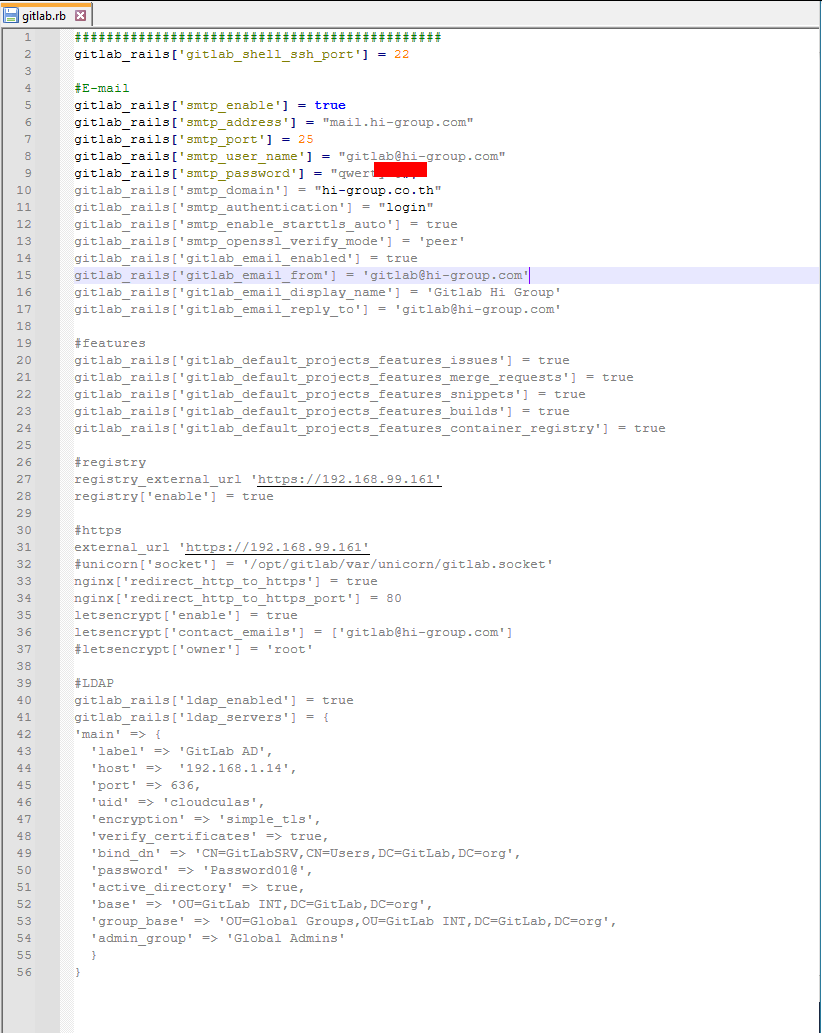

GitLab

GitLab config files might also be juicy, as there is typically an SMTP server configured.

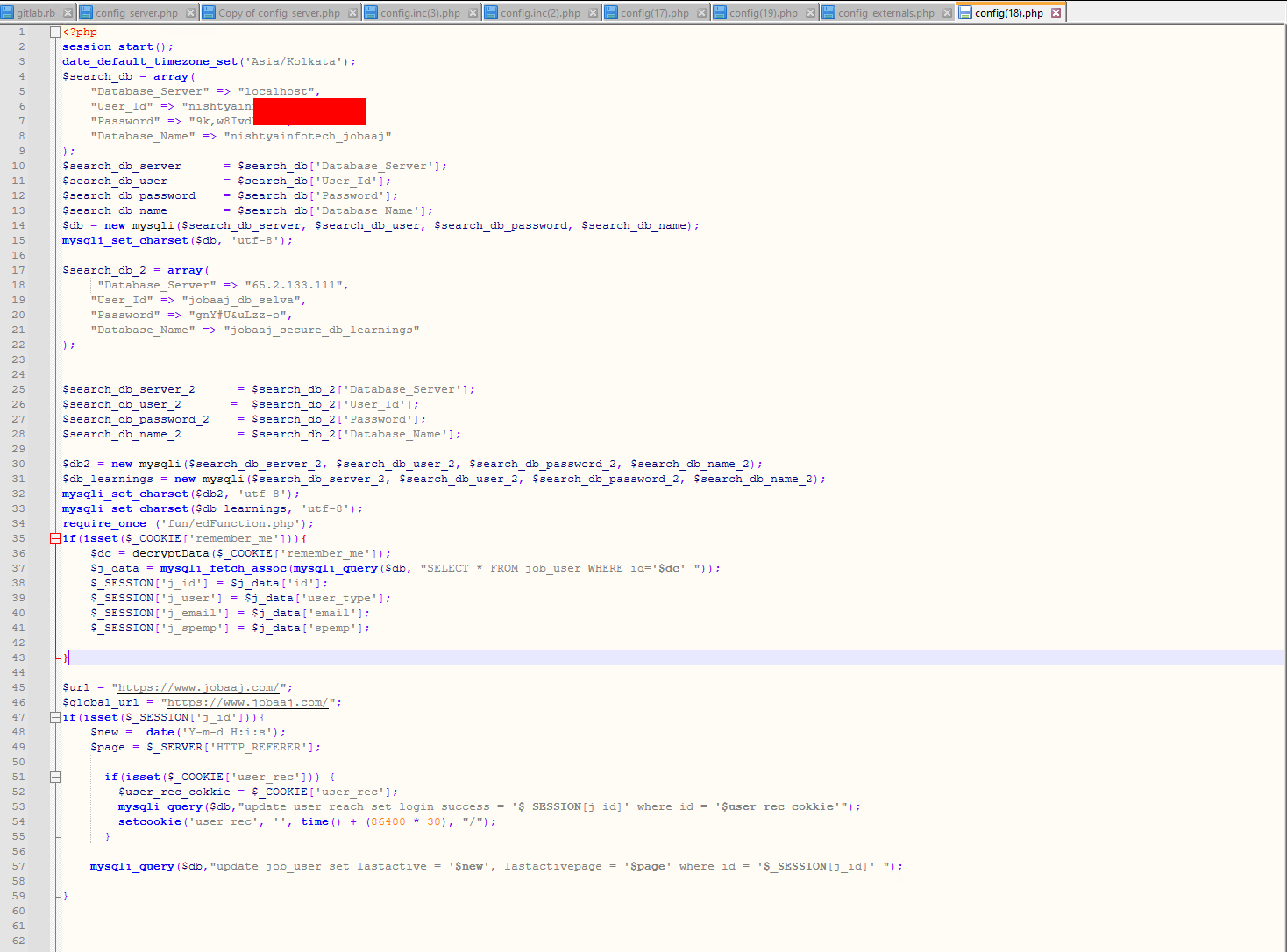

config.php

Not really a surprise: PHP config files are crazy, and a lot of credentials can be found in them.

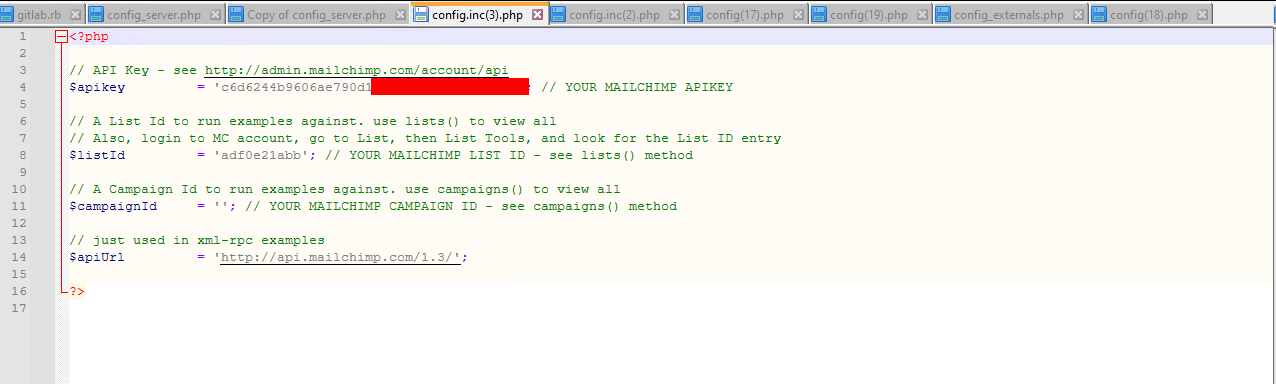

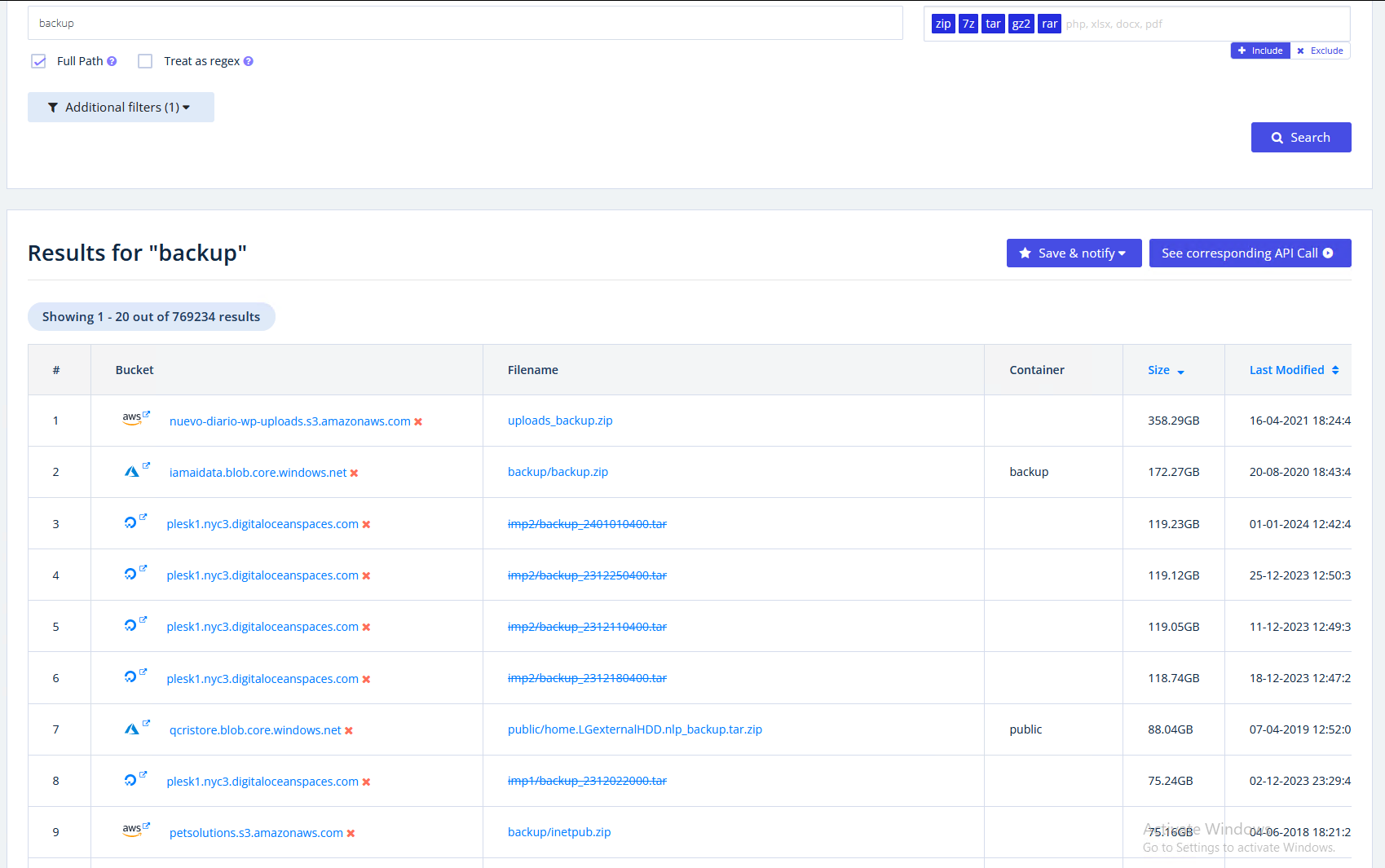

Backups

There are also complete backups publicly available, sometimes several terabytes in size. Of course, they still might be encrypted, but yeah, let’s keep the hope.

Can’t lose the backup if it’s publicly available

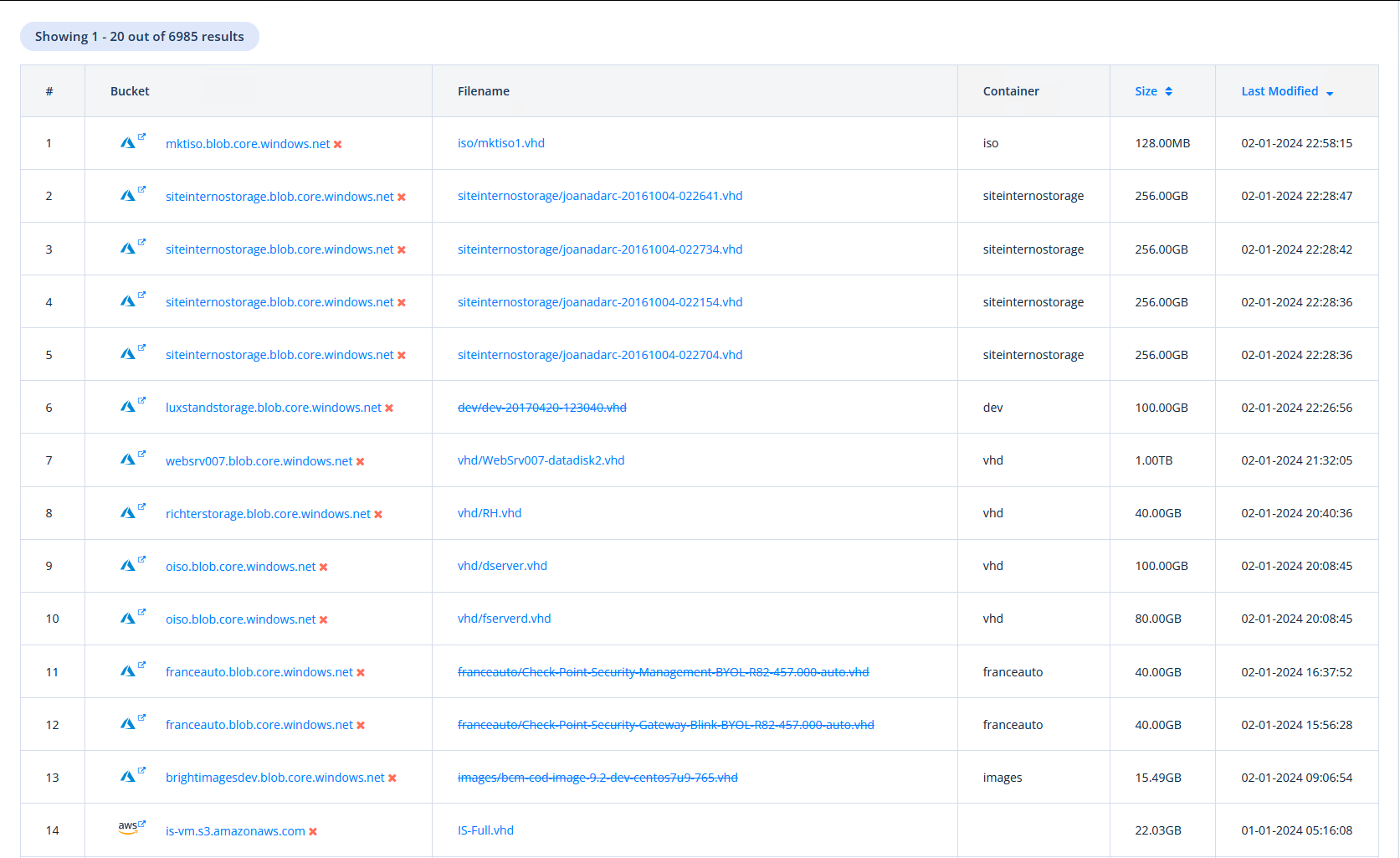

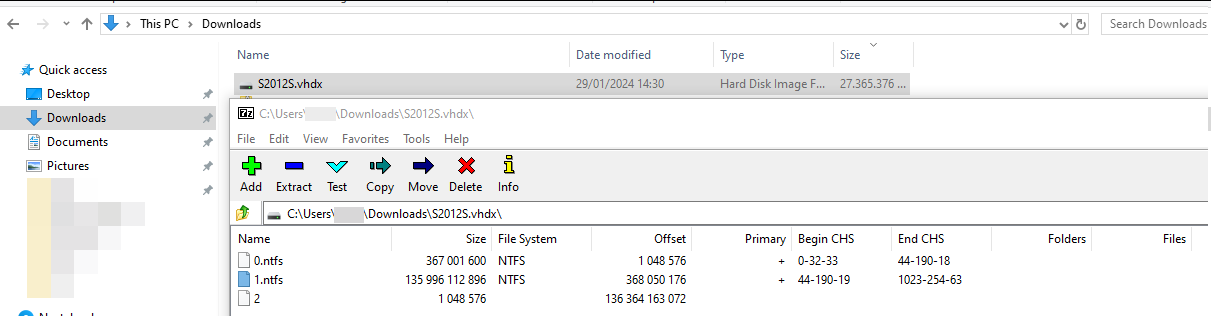

Virtual disks

Virtual disks can easily be opened with 7-Zip.

Opened virtual disk

Mailstore is a solution for email archives, so this might not be good.

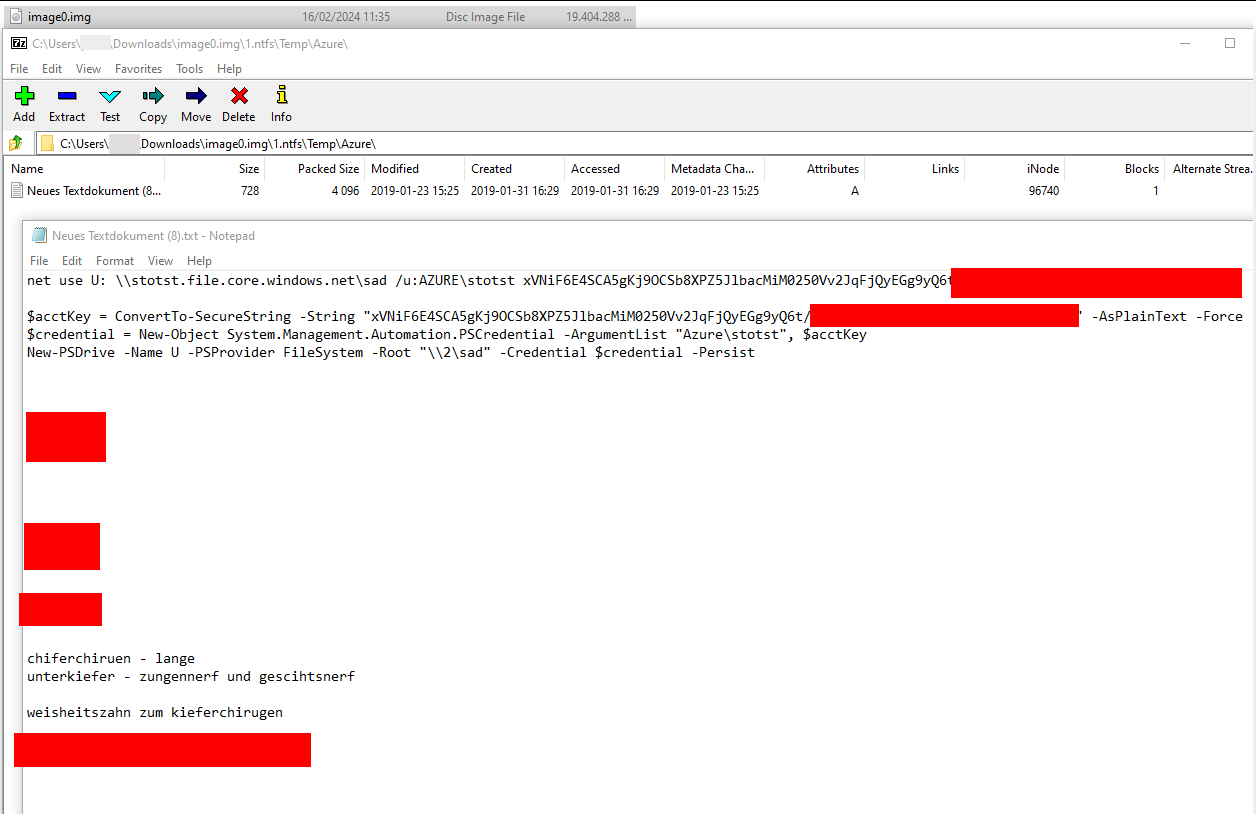

IMG files

The same goes for IMG files, which often also serve as backups.

IMG files

Veeam & Acronis Backups

https://buckets.grayhatwarfare.com/files?extensions=vbk,tib&order=last_modified&direction=desc

Veeam & Acronis backups are normally password protected. But if the password can be cracked, the contents will mostly be juicy.

Forensic Images

Forensic images are full disk images used during incident response. With the correct tools, they can be opened. There are, for example, the forensic tools for 7-Zip.

Archive files

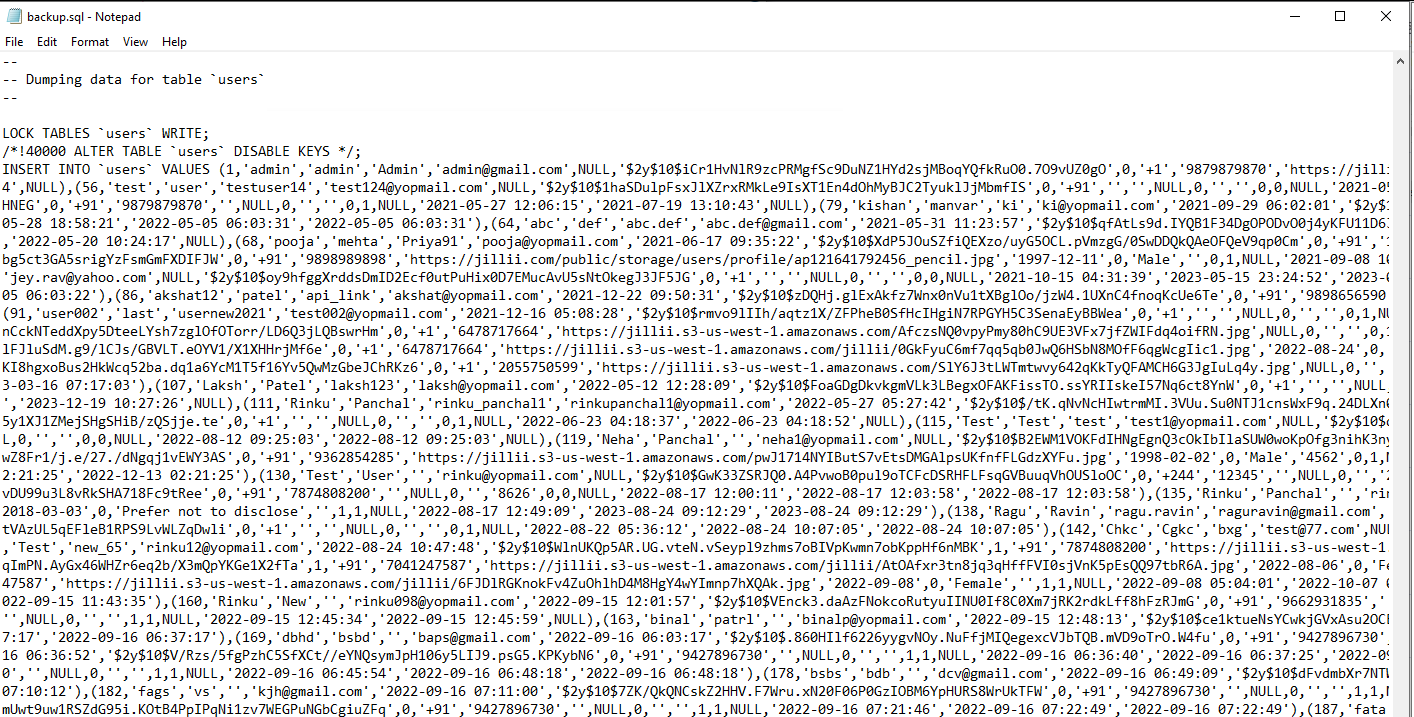

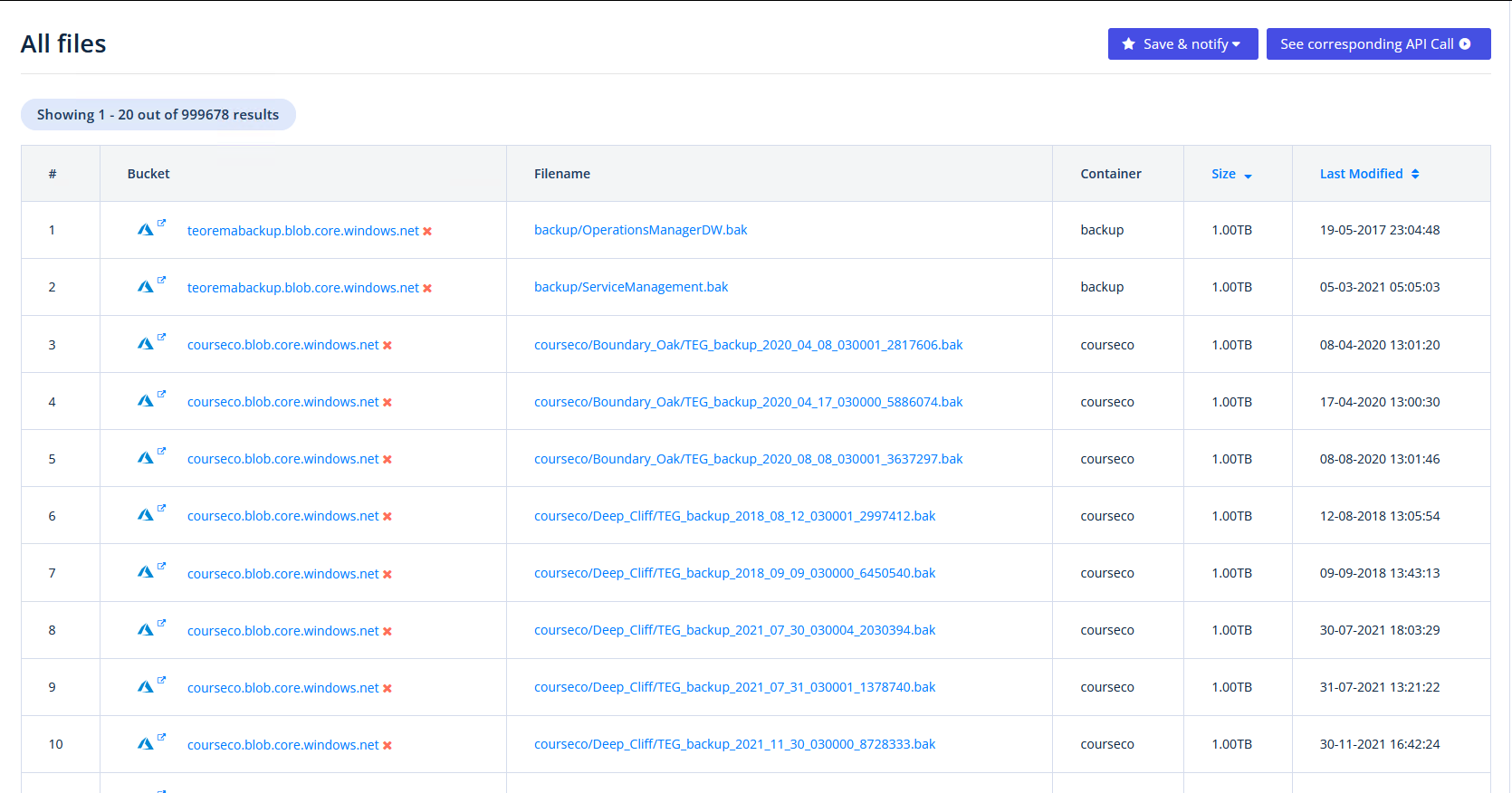

SQL

https://buckets.grayhatwarfare.com/files?extensions=bak&order=size&direction=desc&page=1

SQL backups are crazy, as they might leak complete application data, including user credentials and payment data. And there are a lot of files…

BAK files typically serve as MSSQL backups

MySQL Dumps

MySQL dumps also contain the complete data.

BSON files

Binary JSON files can also be used as backups, and there are some with quite interesting data.

BSON file with tokens for Firebase

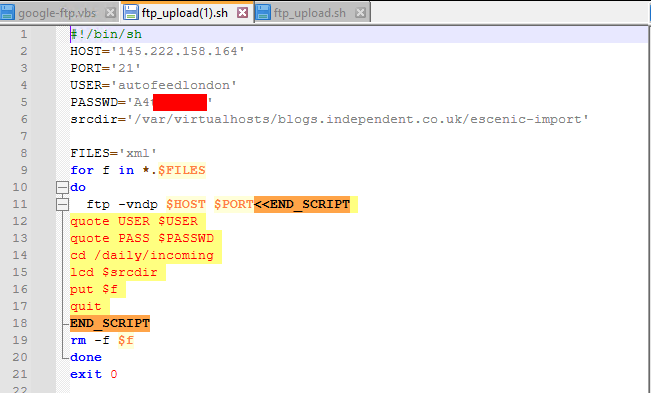

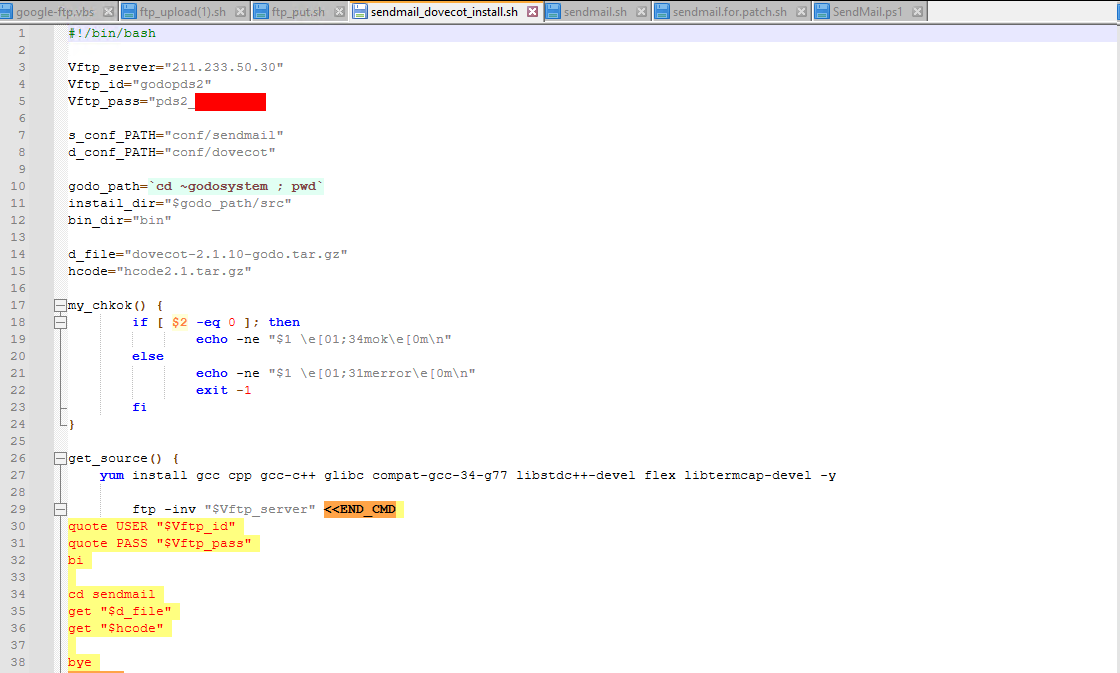

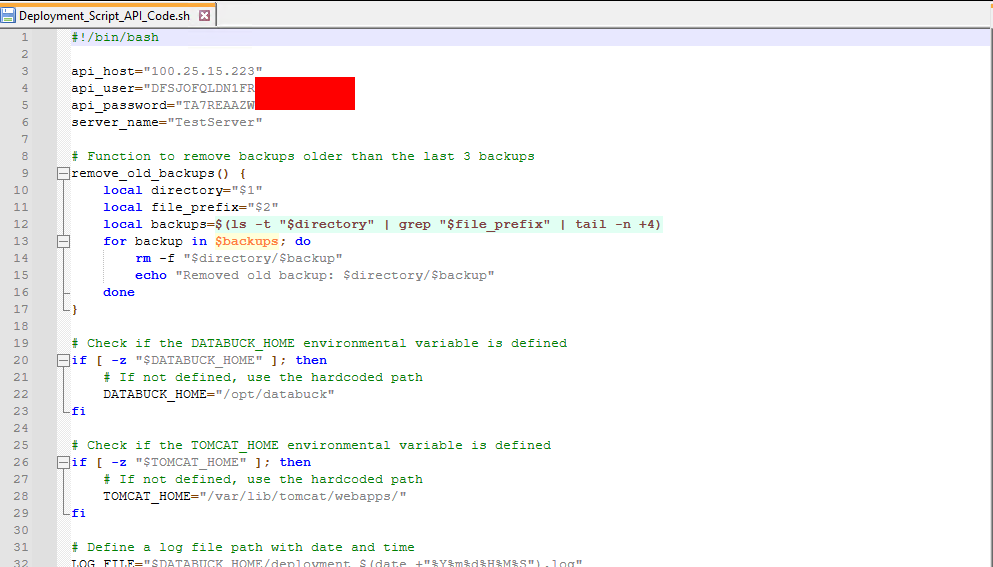

Scripts

Also no surprise: we can find a lot of the typical credentials in script files like vbs, cmd, bat, ps1 and sh. The difficult part is guessing a file name that might contain credentials, as we cannot grep through the files without downloading them.
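On your own scripts, where grepping is possible, a simple pattern check before uploading catches the most obvious case. A minimal sketch, assuming the commonly documented shape of AWS access key IDs (a four-letter prefix such as AKIA or ASIA followed by 16 uppercase letters or digits); the sample script uses the placeholder key from the AWS documentation:

```python
import re

# Assumed key ID shape: AKIA (long-term) or ASIA (temporary) + 16 characters
AWS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b")


def find_aws_key_ids(text):
    """Return (line number, key ID) pairs for everything that looks like a key ID."""
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        for match in AWS_KEY_ID.findall(line):
            hits.append((number, match))
    return hits


SCRIPT = """#!/bin/bash
export AWS_ACCESS_KEY_ID=AKIAIOSFODNN7EXAMPLE
aws s3 sync ./build s3://my-bucket
"""

print(find_aws_key_ids(SCRIPT))
```

This only finds key IDs, not the secret itself, but a key ID in a script is usually a good hint that the secret sits on the next line.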

Bash script with AWS credentials

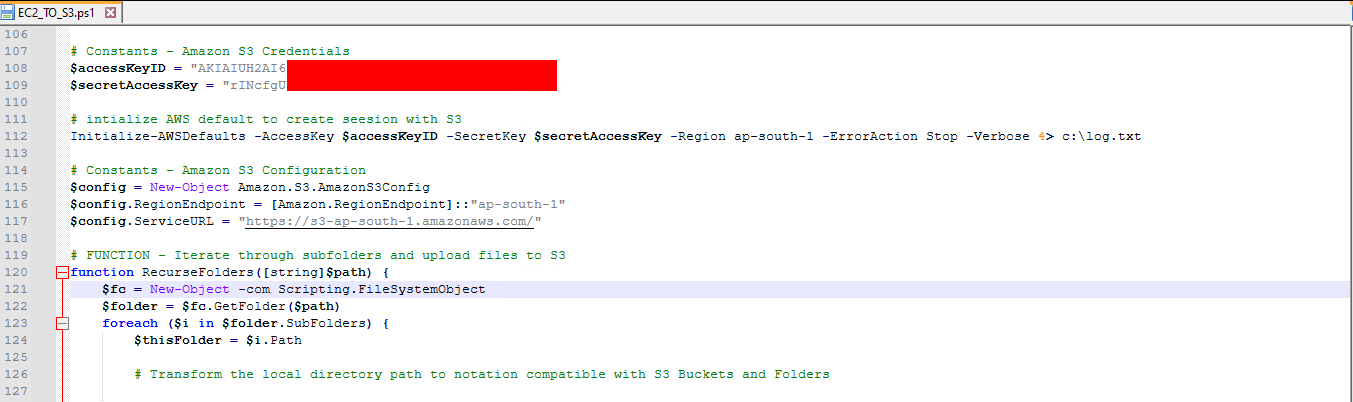

PowerShell script with credentials

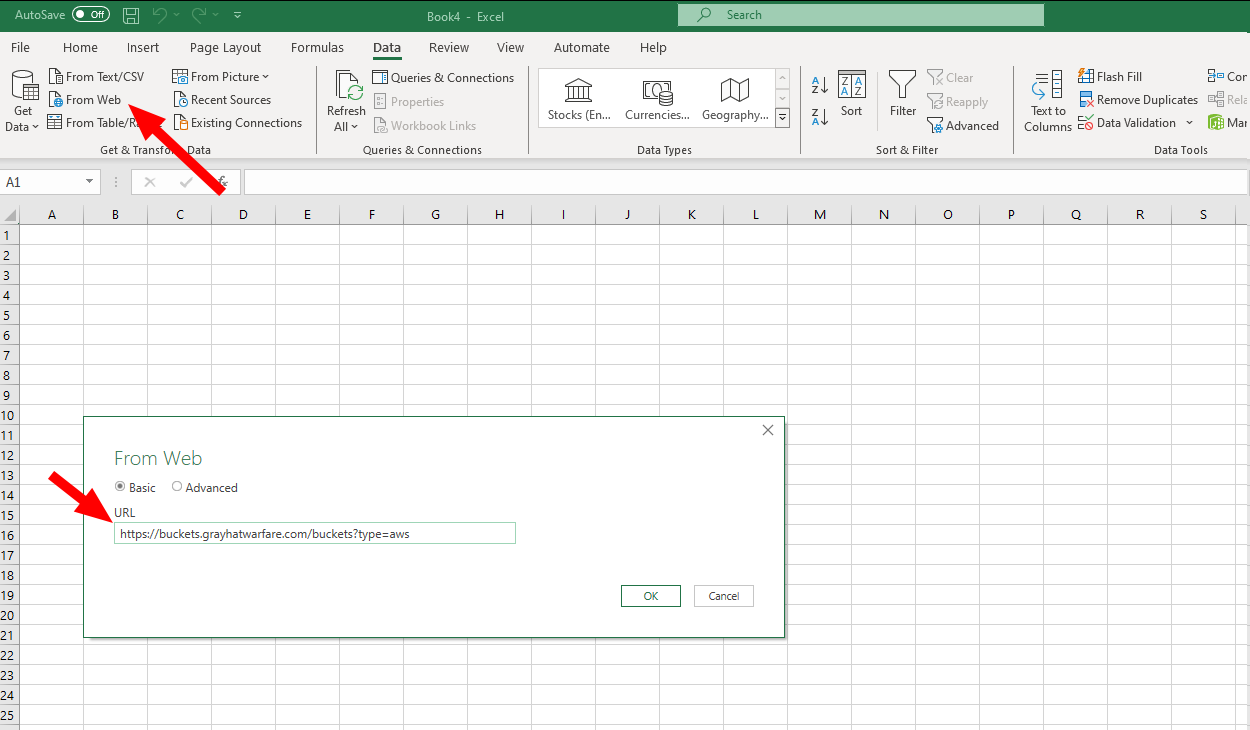

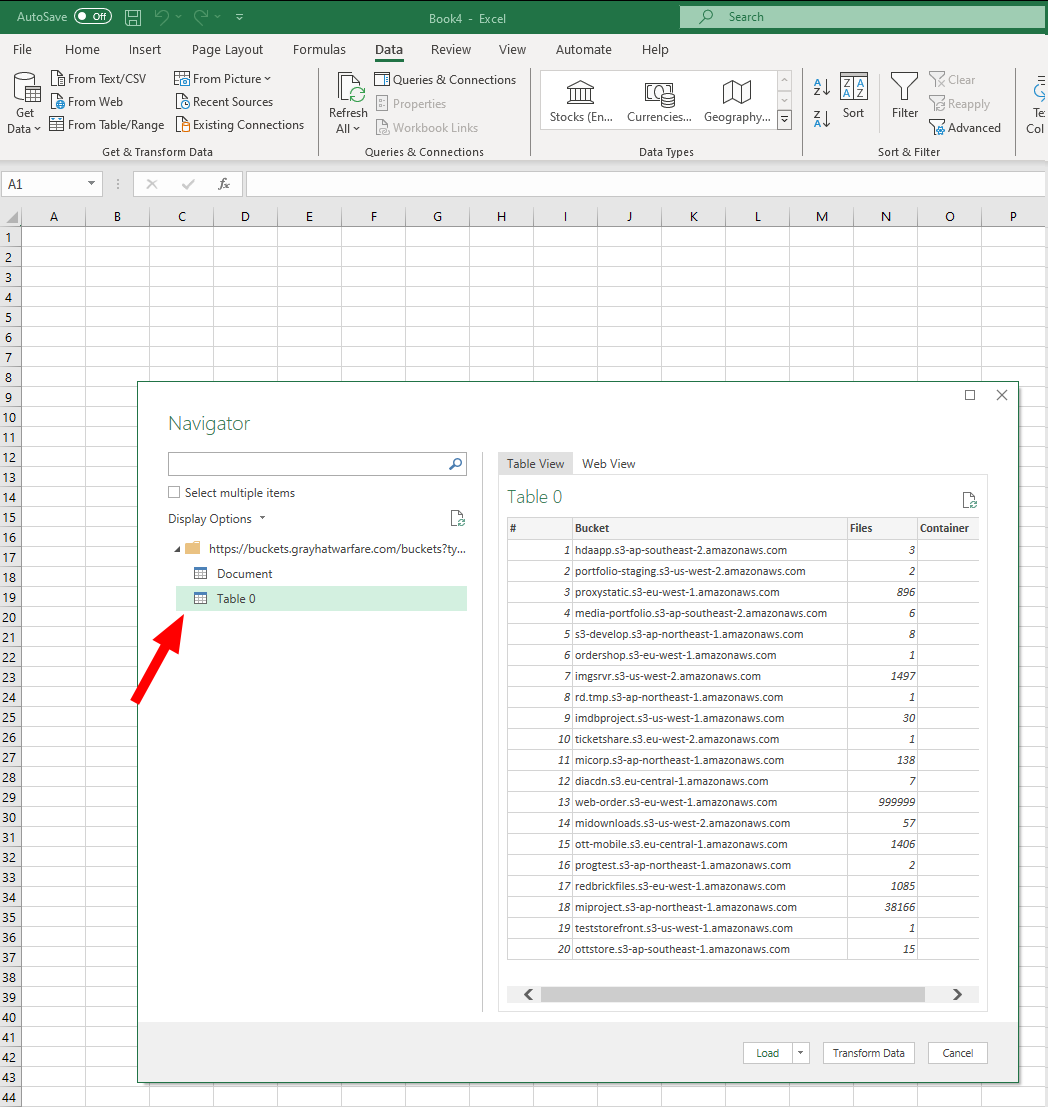

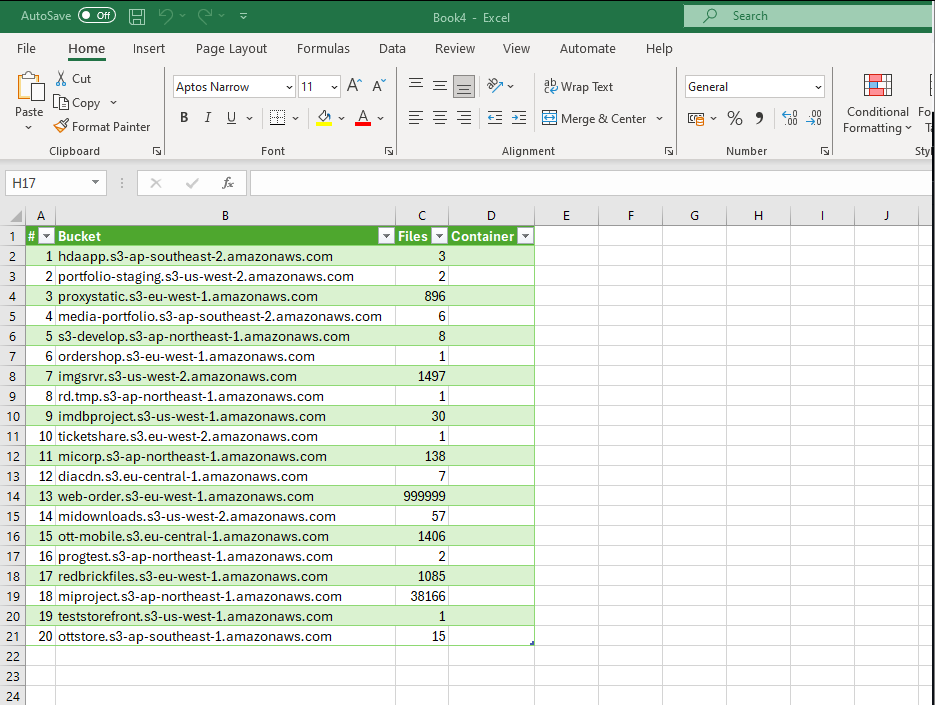

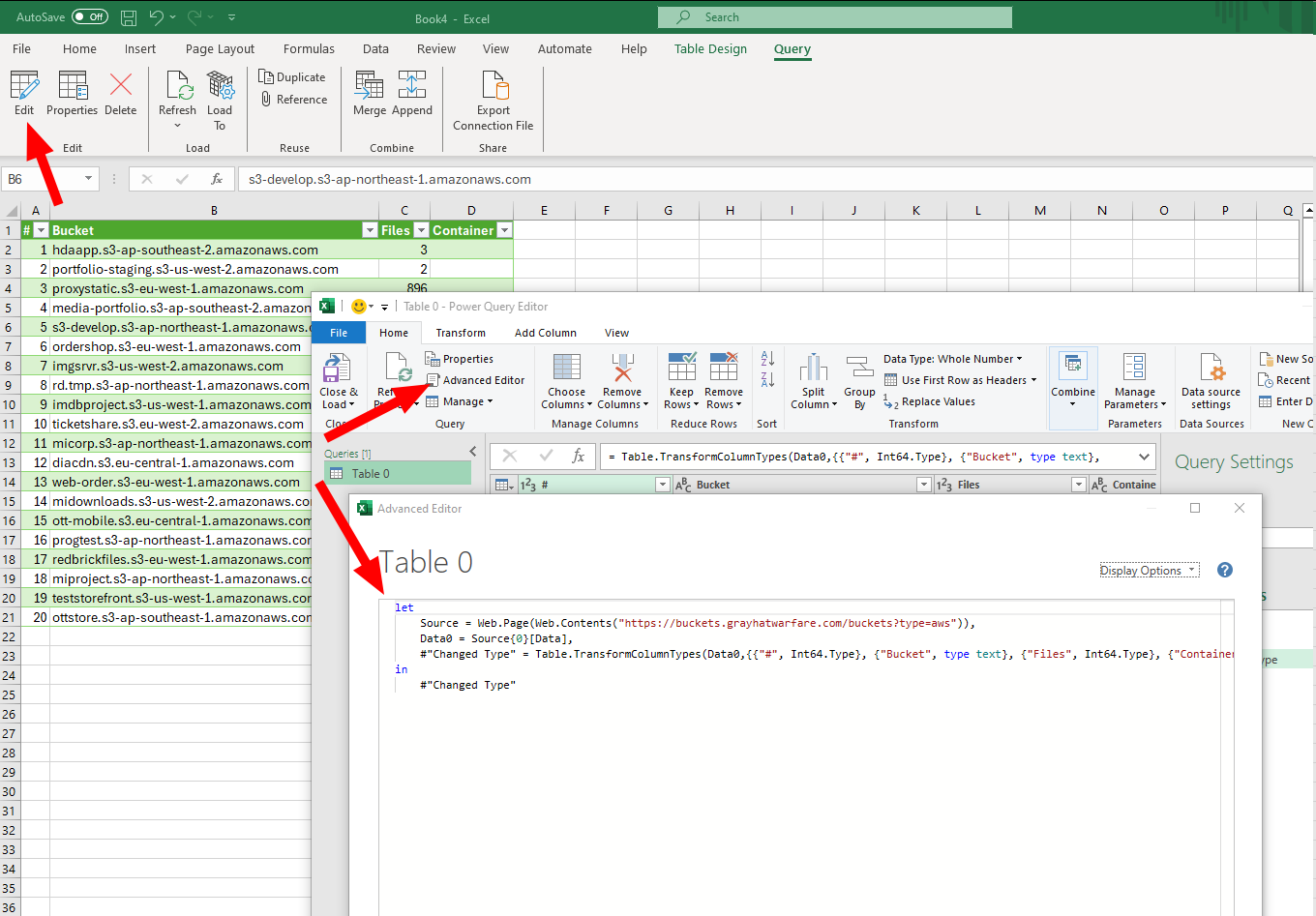

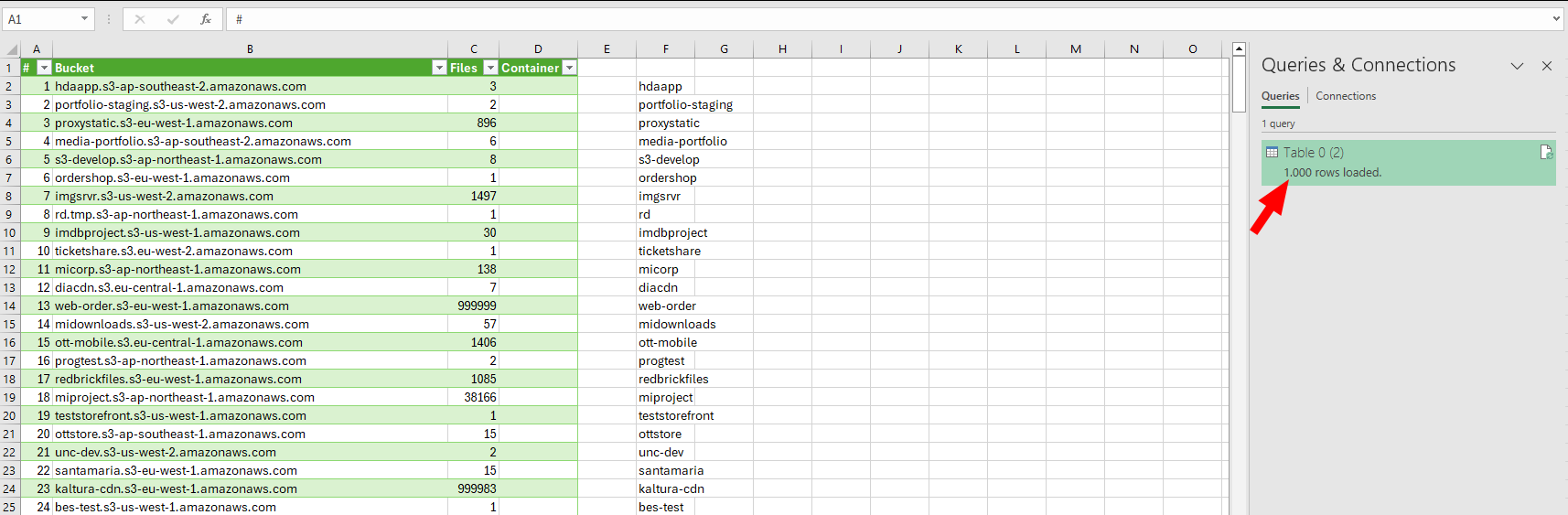

Excursus 1: Scraping data easily with Excel

Excel nowadays offers a nice feature for quickly gathering data from a web service. Let’s say we want to check for S3 buckets of our own and therefore want a list.

https://buckets.grayhatwarfare.com/buckets?type=aws

We can now easily import this data into Excel with a query.

But wait, now we only have the first page of data …

Easy!

    let
        // Define a function to get data for a specific page
        GetDataForPage = (page) =>
            let
                Source = Web.Page(Web.Contents("https://buckets.grayhatwarfare.com/buckets?type=aws&page=" & Text.From(page))),
                Data0 = Source{0}[Data],
                #"Changed Type" = Table.TransformColumnTypes(Data0,{{"#", Int64.Type}, {"Bucket", type text}, {"Files", Int64.Type}, {"Container", type text}})
            in
                #"Changed Type",
        // Use List.Generate to create the list of page numbers 1 to 50
        Pages = List.Generate(() => 1, each _ <= 50, each _ + 1),
        // Use Table.Combine to combine the data for all pages
        CombinedData = Table.Combine(List.Transform(Pages, GetDataForPage))
    in
        CombinedData

Now we crawl 50 pages, or 1000 rows.

1000 rows crawled within 50 requests

This is not a rate-limit bypass. If you run into rate limiting, you need to tinker with this a little more, maybe with catspin or fireprox!
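For readers without Excel, the paging logic of the query boils down to generating one URL per page number. A minimal sketch without any network access; `ROWS_PER_PAGE = 20` is an assumption derived from the "50 pages or 1000 rows" figure, and `page_urls` is a made-up helper name:

```python
from urllib.parse import urlencode

BASE = "https://buckets.grayhatwarfare.com/buckets"
ROWS_PER_PAGE = 20  # assumption: 1000 rows / 50 pages


def page_urls(first, last, bucket_type="aws"):
    """Return one listing URL per page number, first and last inclusive."""
    return [
        f"{BASE}?{urlencode({'type': bucket_type, 'page': page})}"
        for page in range(first, last + 1)
    ]


urls = page_urls(1, 50)
print(len(urls), "requests,", len(urls) * ROWS_PER_PAGE, "rows expected")
```

Fetching the pages sequentially with a pause in between is the polite option and also the one least likely to trip a rate limit.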

Excursus 2: AWS key validation

Check if the credentials are still valid.

    root@ ~ [1]# aws configure --profile tmp
    AWS Access Key ID [None]: AKIAIUH2AI65LLS3Y###
    AWS Secret Access Key [None]: rINcfgU63NBUFaFJFP3kO9cR4sVX############
    Default region name [None]: ap-south-1
    Default output format [None]:

    root@ ~# aws sts get-caller-identity --profile tmp
    {
        "UserId": "AIDAJLSWOFPMWAJPP5S36",
        "Account": "140444460056",
        "Arn": "arn:aws:iam::140444460056:user/chetan.awate"
    }

    root@ ~# aws s3 ls --profile tmp
    2022-12-14 12:54:34 63moons-map-migrated
    2022-12-14 12:55:14 a-presto-test-bucket-1
    2022-12-14 12:55:46 amplify-myamplifytest-dev-163751-deployment
    2022-12-14 12:56:15 astha-wfh-ec2
    2022-12-14 12:56:45 astha-wfh-ec2-prod-serverlessdeploymentbucket-crtvtwylvb7o
    [...]
    2022-12-14 15:52:07 wave2-binaries
    2022-12-14 15:52:10 zohobucket1

    root@ ~# aws ec2 describe-instances --profile tmp
    {
        "Reservations": [
            {
                "Groups": [],
                "Instances": [
                    {
                        "AmiLaunchIndex": 0,
                        "ImageId": "ami-7d5f2412",
                        "InstanceId": "i-0280648b8540ccbc0",
                        "InstanceType": "t2.medium",
                        "KeyName": "asterisk_server",
                        "LaunchTime": "2022-02-03T05:53:46.000Z",
                        "Monitoring": {
                            "State": "disabled"
                        },
                        "Placement": {
                            "AvailabilityZone": "ap-south-1a",
                            "GroupName": "",
                            "Tenancy": "default"
                        },
                        "PrivateDnsName": "ip-172-31-23-174.ap-south-1.compute.internal",
                        "PrivateIpAddress": "172.31.23.174",
                        [...]
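The `get-caller-identity` output already tells us what kind of principal a key belongs to, since the ARN encodes the account and the resource type. A minimal sketch that splits the ARN of such a response; the sample identity is invented and `summarize_identity` is a hypothetical helper, not part of the AWS CLI:

```python
import json


def summarize_identity(sts_json):
    """Extract account, principal type and name from get-caller-identity output."""
    identity = json.loads(sts_json)
    # ARN layout: arn:partition:service:region:account-id:resource
    _, _partition, service, _region, account, resource = identity["Arn"].split(":", 5)
    kind, _, name = resource.partition("/")
    return {"account": account, "service": service, "kind": kind, "name": name}


SAMPLE = """{
    "UserId": "AIDAEXAMPLEEXAMPLE",
    "Account": "123456789012",
    "Arn": "arn:aws:iam::123456789012:user/example.user"
}"""

print(summarize_identity(SAMPLE))
```

A `kind` of `user` means a long-lived IAM user key, which is the case seen throughout this post.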

Excursus 3: Download snippet

During my search, it was quite handy to simply download a batch of files. A command for this might look like the following. And yeah, there is quite a lot of room for improvement, but hey, it should work!

    curl --request GET \
      --url 'https://buckets.grayhatwarfare.com/api/v2/files?keywords=winscp&order=last_modified&direction=desc&extensions=ini&start=0&limit=100' \
      --header 'Authorization: Bearer ###########' \
      | jq . | grep "url" | cut -d ":" -f 2- \
      | sed 's/,//g' | xargs -I {} wget --no-check-certificate {}
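One of the possible improvements is to parse the JSON instead of grepping it. A minimal sketch that collects every string stored under a `url` key, wherever it sits in the response; the sample response is invented and its layout is an assumption, which is why the walk does not rely on any particular structure:

```python
import json


def collect_urls(node):
    """Recursively collect every string stored under a "url" key."""
    found = []
    if isinstance(node, dict):
        for key, value in node.items():
            if key == "url" and isinstance(value, str):
                found.append(value)
            else:
                found.extend(collect_urls(value))
    elif isinstance(node, list):
        for item in node:
            found.extend(collect_urls(item))
    return found


# Invented sample response, only for illustration
SAMPLE = """{"files": [
    {"filename": "a.ini", "url": "https://example.com/a.ini"},
    {"filename": "b.ini", "url": "https://example.com/b,c.ini"}
]}"""

print(collect_urls(json.loads(SAMPLE)))
```

Unlike the `sed 's/,//g'` step, this keeps URLs that contain a comma intact.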